The emergence of powerful language models, particularly OpenAI’s ChatGPT, has redefined what’s possible in natural language processing (NLP) and AI-driven applications. By integrating ChatGPT into your Python projects, you can automate text generation, summarize content, answer questions, write code, and more, all with a surprisingly high level of fluency and context-awareness.

In this comprehensive guide, we will explore, step by step, how to set up and use the ChatGPT API in your Python applications. We will cover everything from fundamental concepts to advanced techniques, including environment setup, prompt engineering, best practices, and integration strategies. By the end, you will be able to confidently build AI-enhanced Python apps using the ChatGPT API.


Throughout these sections, we will dive into each topic in detail, illustrating how you can build a robust, AI-driven experience for your users.

Introduction to ChatGPT and AI-Enhanced Applications

What is ChatGPT?

ChatGPT is an advanced language model developed by OpenAI. It is part of the GPT (Generative Pre-trained Transformer) family, which uses deep learning algorithms and large-scale training data to understand and generate human-like text. Although ChatGPT excels at producing coherent and context-aware responses, it is not a general AI. Instead, it’s a specialized system designed to perform language tasks, such as:

- Answering questions: Provide succinct or in-depth responses to user queries.

- Text generation: Craft original text, including blog posts, social media content, stories, and more.

- Conversation simulation: Engage in back-and-forth dialogue for chatbots and support agents.

- Content summarization: Condense large pieces of text into shorter, more digestible summaries.

- Language translation: Translate text from one language to another (though specialized translation tools may sometimes yield more consistent results).

Why Use ChatGPT in Your Python Apps?

Python is a favorite for developers working with AI and data science. Its readability, extensive libraries, and active community make building AI solutions more accessible. By integrating ChatGPT into a Python application, you can:

- Enhance user experiences with natural language capabilities.

- Automate content creation for marketing or educational purposes.

- Streamline customer support via intelligent chatbots.

- Improve productivity by automating repetitive text-processing and text-generation tasks.

- Perform complex text analysis, classification, or summarization without building your own NLP from scratch.

Use Cases and Potential Applications

Here are some popular use cases where ChatGPT can make your Python application more powerful:

- Customer Support Chatbots: Automate responses to common questions, provide detailed explanations, or guide customers through troubleshooting procedures.

- Automated Writing Tools: Generate drafts for blog posts, product descriptions, and social media content, reducing writer’s block and saving time.

- Interactive Tutorials and Learning Platforms: Provide on-demand explanations or clarifications for learners, simulating a tutor’s role.

- Data Extraction and Summarization: Digest large sets of text data (such as documents, articles, or research papers) and present concise, relevant information.

- Code Generation and Assistance: Assist developers by generating code snippets, explaining functionality, or suggesting optimizations (always verify the generated code for correctness).

As you can see, ChatGPT offers many possibilities for both personal projects and enterprise-scale applications.

Understanding the ChatGPT API

API Basics

OpenAI’s ChatGPT API (often referred to as the Chat Completions API) provides an interface to interact with GPT models via HTTP requests. You send a payload containing instructions and conversation context (known as a “prompt”), and the API responds with generated text. Key aspects include:

- Endpoint: The ChatGPT API uses the endpoint POST /v1/chat/completions.

- Request Body: You supply a model (e.g., gpt-3.5-turbo or newer models such as gpt-4 and gpt-4o), a list of messages, and various parameters to shape the output.

- Response: A JSON object containing the text generated by the model, plus metadata such as token usage and the reason generation stopped (finish_reason).

- Billing: Charges are based on tokens processed (both in and out). Tokens roughly correlate to words or subword units.

Models and Versions

OpenAI periodically releases and updates various GPT models, each with different capabilities and price points. Notable models include:

- GPT-4o: Introduced in May 2024, GPT-4o (“o” for “Omni”) is a multimodal model capable of processing and generating text, images, and audio. Through the API it is twice as fast as GPT-4 Turbo and costs half as much. In ChatGPT, GPT-4o is available to all users, including free users within certain usage limits, with higher limits for ChatGPT Plus subscribers.

- GPT-4o mini: Released in July 2024, GPT-4o mini is a smaller, more cost-effective version of GPT-4o. It replaced GPT-3.5 Turbo in the ChatGPT interface and is designed for applications requiring efficiency and speed.

- o1: Launched in December 2024, the o1 model is engineered to tackle complex problems by dedicating more processing time before generating responses. This enhances performance in tasks involving competitive programming, mathematics, and scientific analysis. The o1 model is available to ChatGPT Plus and Team members.

- o1-mini: Introduced in September 2024, o1-mini offers a faster and more affordable alternative while maintaining robust reasoning capabilities. It is effective for science and coding-related tasks.

- o1-pro: Debuted in December 2024, o1-pro builds upon the o1 model by utilizing increased computational resources to deliver enhanced performance. This version is available exclusively through the ChatGPT Pro subscription.

- o3-mini: Released in January 2025, o3-mini succeeds o1-mini, offering improved performance and efficiency. It is suitable for users seeking advanced reasoning capabilities in a compact model.

- o3-mini-high: Also introduced in January 2025, this ChatGPT option runs o3-mini with a higher reasoning effort, spending more computation per response to further enhance its reasoning abilities. In the API, the equivalent is calling o3-mini with reasoning_effort set to "high".

- GPT-4.5: Codenamed “Orion,” GPT-4.5 was released in February 2025. Described as a high-performance, resource-intensive model, GPT-4.5 offers the latest advancements in the GPT series.

Choosing the Right Model:

The ideal model depends on your specific use case. For advanced reasoning or tasks requiring high accuracy, models like o1 or GPT-4.5 are recommended. For general conversation and efficient text generation, GPT-4o or its mini variants are typically sufficient.
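One way to apply this advice in code is to route each request to a model based on the kind of task. The following is a minimal sketch. The task categories, the `MODEL_OPTIONS` table, and the `request_options` helper are hypothetical, and the model names come from the list above, so confirm which ones your account can access. For o-series reasoning models, recent versions of the Chat Completions API accept a `reasoning_effort` parameter ("low", "medium", or "high").

```python
# Hypothetical routing table from task type to request options
MODEL_OPTIONS = {
    "chat": {"model": "gpt-4o-mini"},
    "writing": {"model": "gpt-4o"},
    # o-series models trade latency for deeper reasoning; effort is adjustable
    "reasoning": {"model": "o3-mini", "reasoning_effort": "high"},
}


def request_options(task: str) -> dict:
    """Return keyword arguments for client.chat.completions.create for a task type."""
    if task not in MODEL_OPTIONS:
        raise ValueError(f"Unknown task type: {task!r}")
    # Return a copy so callers can add messages without mutating the table
    return dict(MODEL_OPTIONS[task])
```

A call would then look like `client.chat.completions.create(messages=messages, **request_options("reasoning"))`. Keeping the mapping in one table makes it easy to swap models as OpenAI releases new ones.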

Key Parameters in the ChatGPT API

When making a request to the ChatGPT API, some parameters significantly influence the output:

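- messages: An array of objects that provides the conversation context. Each object has a role (system, user, or assistant) and content.

- temperature: Controls randomness (accepted range 0 to 2). Higher values (e.g., 1.0) produce more varied output, while lower values (e.g., 0) make the output more focused and close to deterministic.

- max_tokens: The maximum number of tokens to generate in the response. Setting a limit helps manage cost and keeps responses concise. (Reasoning models such as o1 and o3-mini use max_completion_tokens instead.)

- top_p: An alternative sampling control (“nucleus sampling”) in which the model samples only from the smallest set of tokens whose cumulative probability reaches top_p. For example, 0.1 restricts sampling to the top 10% of probability mass. OpenAI generally recommends adjusting temperature or top_p, but not both.

- frequency_penalty / presence_penalty: Values from -2.0 to 2.0. Positive values reduce repetition (frequency_penalty penalizes tokens by how often they have already appeared) or encourage the model to introduce new topics (presence_penalty penalizes tokens that have appeared at all).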

Understanding these parameters allows you to fine-tune responses to best suit your application’s needs.
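To catch out-of-range values before they cost a round trip, you can validate these parameters when building the request body. The following is a minimal sketch that assumes the documented Chat Completions ranges (temperature 0 to 2, top_p 0 to 1, penalties -2 to 2). `build_payload` is a hypothetical helper, and the API itself remains the final authority on what it accepts.

```python
from typing import Optional

# Documented ranges for Chat Completions sampling parameters
RANGES = {
    "temperature": (0.0, 2.0),
    "top_p": (0.0, 1.0),
    "frequency_penalty": (-2.0, 2.0),
    "presence_penalty": (-2.0, 2.0),
}


def build_payload(model: str, messages: list[dict], max_tokens: Optional[int] = None,
                  **sampling: float) -> dict:
    """Build a request body, rejecting unknown or out-of-range sampling parameters."""
    for name, value in sampling.items():
        if name not in RANGES:
            raise ValueError(f"Unsupported parameter: {name}")
        low, high = RANGES[name]
        if not low <= value <= high:
            raise ValueError(f"{name}={value} is outside [{low}, {high}]")
    payload = {"model": model, "messages": messages, **sampling}
    if max_tokens is not None:
        payload["max_tokens"] = max_tokens
    return payload
```

The returned dict can be sent as the JSON body with requests, as in the requests example later in this guide, or unpacked into the openai client call.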

Prerequisites and Environment Setup

Basic Python Knowledge

You should be familiar with Python syntax and basic programming constructs—variables, functions, modules, and package management—before integrating the ChatGPT API. If you are building a web application, familiarity with frameworks like Flask or Django is beneficial.

Python Environment

Set up a dedicated Python environment (using venv, Conda, or a Docker container) to isolate dependencies. For example:

```bash
# Create a virtual environment
python -m venv venv

# Activate it on Linux/macOS
source venv/bin/activate

# Activate it on Windows (Command Prompt)
venv\Scripts\activate.bat
```

Installing Required Libraries

If you want to call the API directly over HTTP, you need the requests or httpx library (or any HTTP client you prefer). You may also want to install python-dotenv for managing environment variables. For instance:

```bash
pip install requests
pip install python-dotenv
```

Alternatively, you could use OpenAI’s official Python client library:

```bash
pip install openai
```

This library streamlines interactions with the ChatGPT API and is recommended for most use cases.

Obtaining and Managing Your API Key

Creating an OpenAI Account

To use the ChatGPT API, you must have an account on the OpenAI platform:

- Visit the OpenAI platform website (platform.openai.com).

- Sign up or log in if you already have an account.

Generating an API Key

Once logged in, do the following to get your key:

- Navigate to your API Keys section.

- Create a new secret key.

- Copy and securely store it. You won’t be able to view it again later on the dashboard.

Storing and Securing Your API Key

Never store your API key in source code. Instead, use environment variables or an external configuration management system. For local development with python-dotenv, create a file named .env:

```
OPENAI_API_KEY=your_secret_key_here
```

Then, in your Python code:

```python
import os

from dotenv import load_dotenv

load_dotenv()  # reads .env and adds its values to the environment
api_key = os.getenv("OPENAI_API_KEY")
```

This keeps your API key out of your source code. Also add .env to your .gitignore so the file itself is never committed to a public repository.
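If the key is missing, the problem otherwise surfaces only as a 401 error on the first request. The following is a minimal sketch of a fail-fast check to run at startup, after `load_dotenv()`. `require_api_key` is a hypothetical name and is not part of python-dotenv or the openai library.

```python
import os


def require_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, or fail with a clear message.

    Hypothetical helper: call it once at startup so a missing key is
    reported immediately instead of as a 401 error on the first request.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set. Add it to your environment or .env file."
        )
    return key
```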

Basic Python Integration with the ChatGPT API

Using the OpenAI Python Library

The quickest way to integrate ChatGPT into Python is by using the official openai library. After installing it, you can make a request as follows:

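```python
import os

from dotenv import load_dotenv
from openai import OpenAI  # openai>=1.0 client interface

load_dotenv()

# The client would also read OPENAI_API_KEY from the environment by default
client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

response = client.chat.completions.create(
    model="gpt-4o-mini",
    messages=[
        {"role": "user", "content": "Hello, how can I integrate ChatGPT into my Python app?"}
    ],
    temperature=0.7,
    max_tokens=100,
)

# The reply text is an attribute on the first choice's message
print(response.choices[0].message.content)
```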

Explanation of Key Parts

- model: Specifies which model to use ("gpt-4o-mini", "gpt-4o", "gpt-3.5-turbo", etc.).

- messages: A list that simulates a conversation. The user’s message is {"role": "user", "content": "..."}.

- temperature, max_tokens: Control output randomness and maximum length.

Using the Requests or HTTPX Library (Alternative)

If you prefer a more manual approach, you can call the API directly with requests:

```python
import os

import requests
from dotenv import load_dotenv

load_dotenv()
api_key = os.getenv("OPENAI_API_KEY")

url = "https://api.openai.com/v1/chat/completions"
headers = {
    "Content-Type": "application/json",
    "Authorization": f"Bearer {api_key}",
}
data = {
    "model": "gpt-4o-mini",
    "messages": [
        {"role": "user", "content": "Hello, how can I integrate ChatGPT into my Python app?"}
    ],
    "temperature": 0.7,
    "max_tokens": 100,
}

# A timeout keeps the call from hanging indefinitely on network problems
response = requests.post(url, headers=headers, json=data, timeout=30)
response_json = response.json()
print(response_json["choices"][0]["message"]["content"])
```

The result is essentially the same, but you have more direct control over the raw HTTP request.

Analyzing the JSON Response

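Both methods return the same data. requests gives you the raw JSON, while the openai library parses it into Python objects with matching field names. A typical successful response looks like: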

```json
{
  "id": "chatcmpl-xyz123",
  "object": "chat.completion",
  "created": 1680281480,
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "Here's how you can integrate ChatGPT..."
      },
      "finish_reason": "stop"
    }
  ],
  "usage": {
    "prompt_tokens": 10,
    "completion_tokens": 20,
    "total_tokens": 30
  }
}
```

Important fields:

- id: Unique identifier of the completion request.

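- choices: Holds the assistant’s response messages. Each choice’s finish_reason is "stop" when the model finished naturally and "length" when max_tokens cut off the output.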

- usage: Tracks how many tokens were used, crucial for cost management.
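When you work with the raw JSON from the requests example, it can help to extract these fields in one place and flag truncated answers. The following is a minimal sketch that assumes the response shape shown above. `parse_completion` and the `Completion` dataclass are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class Completion:
    text: str
    finish_reason: str
    prompt_tokens: int
    completion_tokens: int

    @property
    def truncated(self) -> bool:
        # "length" means the output hit max_tokens before finishing
        return self.finish_reason == "length"


def parse_completion(response_json: dict[str, Any]) -> Completion:
    """Extract the first choice and token usage from a Chat Completions response."""
    choice = response_json["choices"][0]
    usage = response_json.get("usage", {})
    return Completion(
        text=choice["message"].get("content") or "",
        finish_reason=choice.get("finish_reason", ""),
        prompt_tokens=usage.get("prompt_tokens", 0),
        completion_tokens=usage.get("completion_tokens", 0),
    )
```

If `truncated` is True, you might raise max_tokens or ask the model to continue in a follow-up message.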

Prompt Engineering: Getting the Best from the Model

The Importance of Effective Prompts

A prompt is how you instruct ChatGPT. The quality of your output is directly related to how well you structure and phrase your prompt. Prompt engineering is all about:

- Providing clear instructions.

- Supplying necessary context or role definitions.

- Asking specific, targeted questions.

Roles in Messages

You can use different roles in the messages list to guide ChatGPT:

- system: Sets the overall behavior or persona of the AI (“You are a helpful assistant…”).

- user: Represents user queries or instructions.

- assistant: Represents the AI’s responses, useful for continuing context in multi-turn conversations.

Example:

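```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

response = client.chat.completions.create(
    model="gpt-4o-mini",
    messages=[
        {"role": "system", "content": "You are a Python expert and code reviewer."},
        {"role": "user", "content": "Please review this Python code for efficiency:\n\n<code snippet>"},
    ],
)
print(response.choices[0].message.content)
```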
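The assistant role matters once a conversation spans several turns. The Chat Completions API is stateless, so you resend the earlier messages with every request. The following is a minimal sketch of a conversation helper. It assumes a hypothetical `complete` callable that takes a message list and returns the reply text, for example a thin wrapper around `client.chat.completions.create`. The `max_turns` trimming policy is an illustrative choice, not an OpenAI requirement.

```python
from typing import Callable

Message = dict[str, str]


class Conversation:
    """Keeps a system prompt plus a rolling window of user/assistant turns."""

    def __init__(self, system_prompt: str, complete: Callable[[list[Message]], str],
                 max_turns: int = 10) -> None:
        self.system: Message = {"role": "system", "content": system_prompt}
        self.history: list[Message] = []
        self.complete = complete
        self.max_turns = max_turns  # one turn = one user message + one assistant reply

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        reply = self.complete([self.system, *self.history])
        # Store the reply as an assistant message so the next request has context
        self.history.append({"role": "assistant", "content": reply})
        # Drop the oldest messages beyond the window; the system prompt is always kept
        excess = len(self.history) - 2 * self.max_turns
        if excess > 0:
            self.history = self.history[excess:]
        return reply
```

With a real client, `complete` could be `lambda msgs: client.chat.completions.create(model="gpt-4o-mini", messages=msgs).choices[0].message.content`. Trimming old turns keeps token usage bounded in long chats.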

Examples and Context

Providing examples or explicit format instructions in your prompt helps the model understand what format you expect for the answer. For instance, if you want ChatGPT to respond in JSON, you can say so in the system message:

```python
messages = [
    {
        "role": "system",
        "content": "You are a JSON API. Always respond in valid JSON format.",
    },
    {
        "role": "user",
        "content": "Give me a breakdown of the top 3 Python libraries for data analysis.",
    },
]
```

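The system instruction steers ChatGPT toward JSON output, but it does not guarantee valid JSON. For stricter control, supported models offer JSON mode via response_format={"type": "json_object"}, which requires the word “JSON” to appear in your messages. Validate the output before using it either way.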
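Combining a worked example (few-shot prompting) with validation on your side makes the format more dependable. The following is a minimal sketch. `build_json_messages` and `parse_json_reply` are hypothetical helpers, and the example exchange inside the prompt is illustrative.

```python
import json
from typing import Any, Optional

FENCE = "`" * 3  # a Markdown code fence, which models sometimes wrap JSON in


def build_json_messages(question: str) -> list[dict[str, str]]:
    """Few-shot prompt: one example exchange shows the exact JSON shape expected."""
    return [
        {"role": "system", "content": "You are a JSON API. Always respond in valid JSON format."},
        # Example exchange demonstrating the desired schema
        {"role": "user", "content": "Give me the top 1 Python library for plotting."},
        {"role": "assistant", "content": '{"libraries": [{"name": "Matplotlib", "use": "plotting"}]}'},
        {"role": "user", "content": question},
    ]


def parse_json_reply(reply: str) -> Optional[dict[str, Any]]:
    """Return the parsed JSON object, or None if the model did not return one."""
    text = reply.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (which may carry a language tag) and the closing fence
        lines = text.splitlines()
        text = "\n".join(line for line in lines[1:] if not line.startswith(FENCE))
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    return data if isinstance(data, dict) else None
```

You can send the same messages with response_format={"type": "json_object"} on models that support JSON mode. The validation step is still worthwhile, because a reply can also be cut off by max_tokens.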

Tips for Better Prompts

- Clarity: Be explicit about what you want: “List 5 bullet points,” “Explain step-by-step,” etc.

- Context: Provide relevant data or background information if needed.

- Format: Specify the format in which you want the response.

- Iterate: Experiment with different prompts, measuring the effectiveness of each approach.

Prompt engineering can drastically improve the user experience and reliability of your AI-enhanced application.

Handling API Responses and Error Management

Handling Successful Responses

A successful response typically includes a status_code of 200 (OK). Your Python script should parse the JSON, extract the generated text, and incorporate it into the user’s workflow. For instance:

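```python
# Continuing the requests example: check the status before parsing the body
if response.status_code == 200:
    response_json = response.json()
    content = response_json["choices"][0]["message"]["content"]
    print(content)
else:
    print(f"Error: {response.status_code} - {response.text}")
```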

Common Errors and Status Codes

Even with a well-structured request, errors can occur. Common ones include:

- 401 Unauthorized: Invalid or missing API key.

- 429 Too Many Requests: Rate limit exceeded, or you’re sending requests too quickly.

- 500 Internal Server Error: An unexpected issue occurred on the server side.

Retrying and Backoff Strategy

When dealing with rate limits or transient errors, implement a retry mechanism with an exponential backoff. For example:

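```python
import random
import time

import requests


def make_request_with_retries(url, headers, data, max_retries=5, base_delay=1.0):
    """POST to the API, retrying rate-limit and server errors with exponential backoff."""
    for attempt in range(max_retries):
        response = requests.post(url, headers=headers, json=data, timeout=30)
        if response.status_code == 200:
            return response.json()
        if response.status_code in (429, 500, 502, 503, 504):
            # Wait 1s, 2s, 4s, ... plus random jitter so clients don't retry in lockstep
            time.sleep(base_delay * (2 ** attempt) + random.random())
        else:
            # Other errors (e.g., 400 or 401) won't succeed on retry, so fail fast
            print(f"Error: {response.status_code} - {response.text}")
            break
    return None
```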

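This approach lets your application recover from rate limits and transient server errors instead of failing outright, and the growing delays avoid hammering the API while it is overloaded. If you use the official openai library, it already retries certain errors (including 429 and 5xx responses) automatically, two times by default. You can change this with OpenAI(max_retries=...).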

Advanced Usage and Customization

Streaming Responses

For real-time applications, streaming output tokens as ChatGPT generates them can be beneficial (e.g., a chatbot that displays messages as they arrive). The openai library provides a stream=True parameter:

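```python
from openai import OpenAI

client = OpenAI()

stream = client.chat.completions.create(
    model="gpt-4o-mini",
    messages=messages,
    stream=True,
)

for chunk in stream:
    # Each chunk carries a small "delta"; its content can be None (e.g., in the final chunk)
    if chunk.choices and chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end="", flush=True)
```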

This prints tokens as they are generated.
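In a chat UI you usually want both the live tokens and the complete text afterward, for logging or for the conversation history. The following is a minimal sketch that assumes chunks shaped like the openai library's streaming objects (`choices[0].delta.content`). `collect_stream` and its `on_token` callback are hypothetical names.

```python
from typing import Any, Callable, Iterable, Optional


def collect_stream(chunks: Iterable[Any],
                   on_token: Optional[Callable[[str], None]] = None) -> str:
    """Forward each streamed text fragment to on_token and return the full reply."""
    parts: list[str] = []
    for chunk in chunks:
        if not chunk.choices:  # some chunks (e.g., usage-only ones) have no choices
            continue
        piece = chunk.choices[0].delta.content
        if piece:
            parts.append(piece)
            if on_token is not None:
                on_token(piece)
    return "".join(parts)
```

For example, `collect_stream(stream, lambda t: print(t, end="", flush=True))` prints tokens live and returns the full reply for your history.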

Controlling Output Style and Voice

You can shape the style, tone, or persona by carefully crafting system messages:

```python
messages = [
    {
        "role": "system",
        "content": (
            "You are a formal assistant. Always respond with academic language, "
            "backing up arguments with credible references and thorough explanations."
        ),
    },
    {
        "role": "user",
        "content": "Explain the concept of neural networks in a concise manner.",
    },
]
```

Adding Functionality with Tool Integration

ChatGPT can be used in conjunction with other Python libraries. For instance:

- Data analysis: Use ChatGPT to generate insights or textual descriptions, then pass data to Pandas, NumPy, or Matplotlib.

- Web scraping: Gather raw data from the web, feed it to ChatGPT for summarization or classification.

- Databases: Store user conversations or ChatGPT outputs for logging or further analytics.
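For the data-analysis pattern, a common split is to compute the numbers in Python and ask ChatGPT only to describe them, so the model never has to do arithmetic. The following is a minimal sketch that uses the standard library's statistics module in place of Pandas. `describe_column_prompt` is a hypothetical helper, and the sample figures are made up.

```python
import statistics


def describe_column_prompt(name: str, values: list[float]) -> list[dict[str, str]]:
    """Compute summary statistics locally and wrap them in a prompt for ChatGPT."""
    summary = {
        "count": len(values),
        "mean": round(statistics.mean(values), 2),
        "median": statistics.median(values),
        "stdev": round(statistics.stdev(values), 2) if len(values) > 1 else 0.0,
        "min": min(values),
        "max": max(values),
    }
    stats_text = ", ".join(f"{key}={value}" for key, value in summary.items())
    return [
        {"role": "system", "content": "You are a data analyst. Describe data in plain language."},
        {"role": "user", "content": f"Summarize these statistics for '{name}': {stats_text}. "
                                    "Do not invent numbers that are not listed."},
    ]


messages = describe_column_prompt("daily_sales", [10.0, 12.0, 14.0])
```

Pass the returned list as messages to the chat completion call. With Pandas, you could build the same summary from `df[column].describe()`.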

Fine-Tuning (GPT Models)

Fine-tuning is supported for several OpenAI models through the API, including GPT-3.5-turbo, GPT-4o, and GPT-4o mini. You upload your own training examples to customize a model for specific tasks, which makes fine-tuning suitable for tailored applications like custom chatbots or domain-specific tools.

Older GPT-3 base models (such as davinci and curie) also supported fine-tuning but have since been retired, with babbage-002 and davinci-002 as their replacements.

You can also customize any model, fine-tuned or not, through function calling, system prompts, and extended context (few-shot or even many-shot learning).

OpenAI’s list of fine-tunable models changes over time, so check the current fine-tuning documentation if fine-tuning is a key part of your use case.
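Fine-tuning a chat model expects a JSONL file. Each line is one example conversation, written in the same messages format used for requests. The following is a minimal sketch of writing such a file. The example conversations (for a hypothetical product, AcmeCloud), the file name train.jsonl, and the `write_training_file` helper are placeholders, and how many examples you need depends on your task.

```python
import json
from pathlib import Path

# Placeholder training examples for a hypothetical product; each is a full conversation
examples = [
    [
        {"role": "system", "content": "You are a support bot for AcmeCloud."},
        {"role": "user", "content": "How do I reset my password?"},
        {"role": "assistant", "content": "Open Settings > Security and choose 'Reset password'."},
    ],
    [
        {"role": "system", "content": "You are a support bot for AcmeCloud."},
        {"role": "user", "content": "Can I export my data?"},
        {"role": "assistant", "content": "Yes. Go to Settings > Data and click 'Export'."},
    ],
]


def write_training_file(conversations, path="train.jsonl"):
    """Write one {"messages": [...]} JSON object per line (JSONL)."""
    with Path(path).open("w", encoding="utf-8") as f:
        for messages in conversations:
            # Each example should end with the assistant reply the model should learn
            if messages[-1]["role"] != "assistant":
                raise ValueError("Each example must end with an assistant message")
            f.write(json.dumps({"messages": messages}) + "\n")
    return path
```

In the openai library, you upload the file with `client.files.create(file=..., purpose="fine-tune")` and reference its ID in `client.fine_tuning.jobs.create(training_file=..., model=...)`.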

Cost Management and Rate Limiting

Token Usage and Billing

OpenAI charges per token (input and output). The total tokens used by both the prompt and the generated response factor into your usage. Monitor usage via:

- Usage Dashboard: On the OpenAI website to track overall spend.

- Response Usage Info: Each response includes a usage field (prompt_tokens, completion_tokens, total_tokens).

To save on costs:

- Shorten prompts: Remove unnecessary words and context.

- Use a lower temperature: This does not change the per-token price, but more focused output may include less unneeded elaboration.

- Set max_tokens: Limit the response length.
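Because each response reports its own usage, you can estimate the cost of a call directly. The following is a minimal sketch. The per-million-token prices are placeholders, not OpenAI's actual rates, so substitute the current figures for your model from the pricing page. `estimate_cost` is a hypothetical helper.

```python
# Placeholder prices in USD per 1M tokens; look up current rates for your model
PRICE_PER_MILLION_TOKENS = {
    "input": 1.00,
    "output": 4.00,
}


def estimate_cost(usage: dict, prices: dict = PRICE_PER_MILLION_TOKENS) -> float:
    """Estimate the cost of one request from its usage field."""
    input_cost = usage["prompt_tokens"] * prices["input"] / 1_000_000
    output_cost = usage["completion_tokens"] * prices["output"] / 1_000_000
    return input_cost + output_cost
```

Summing `estimate_cost(response_json["usage"])` across requests gives a running total you can compare against a budget.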

Handling Rate Limits

OpenAI imposes rate limits, measured in requests and tokens per minute, that depend on your organization’s usage tier and on the model you call. If you exceed them, you’ll receive 429 errors. Techniques to manage rate limits effectively include:

- Batching requests when possible.

- Caching results from repetitive or similar requests.

- Implementing a queue to spread out requests over time.
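Caching is the simplest of these techniques to add. The following is a minimal sketch of an in-memory cache keyed on the model, parameters, and messages. `CachedCompleter` and the `complete` callable it wraps are hypothetical. Caching only makes sense where reusing an earlier answer is acceptable, typically for low-temperature, non-personalized prompts.

```python
import hashlib
import json
from typing import Callable


class CachedCompleter:
    """Wraps a completion function and reuses answers for identical requests."""

    def __init__(self, complete: Callable[..., str]) -> None:
        self.complete = complete
        self.cache: dict[str, str] = {}
        self.hits = 0

    @staticmethod
    def _key(model: str, messages: list[dict], **params) -> str:
        # sort_keys makes the key independent of dict ordering
        payload = json.dumps({"model": model, "messages": messages, **params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def __call__(self, model: str, messages: list[dict], **params) -> str:
        key = self._key(model, messages, **params)
        if key in self.cache:
            self.hits += 1
            return self.cache[key]
        answer = self.complete(model=model, messages=messages, **params)
        self.cache[key] = answer
        return answer
```

In production, you would likely bound the cache size or add an expiry time so stale answers eventually refresh.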

Monitoring Usage

Regularly check your usage and billing to ensure you remain within budget. Implementing automated alerts or usage thresholds can prevent unexpected costs.

Security Best Practices

API Key Protection

Your API key is sensitive. Leaking it publicly could let others use your account and charge you for tokens. Always use environment variables or a secure configuration management system.

Sanitizing User Input

If your Python application accepts user-provided text, expect that some users will submit malicious content, including prompt injection attempts that try to override your system instructions. Treat both user input and the model’s output as untrusted. While ChatGPT attempts to limit harmful output, you remain responsible for validating and sanitizing data:

- SQL injection: If you store user content or model output in a database, use parameterized queries rather than building SQL strings.

- HTML/JS injection: If you display user content or model output in a web interface, escape or sanitize it to avoid cross-site scripting (XSS).
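Both defenses are available in Python's standard library. The following is a minimal sketch that uses sqlite3 with a parameterized query and html.escape for output. The conversations table and the `save_and_render` function are hypothetical.

```python
import html
import sqlite3


def save_and_render(conn: sqlite3.Connection, user_text: str, model_reply: str) -> str:
    """Store an exchange safely and return an HTML fragment that is safe to display."""
    # "?" placeholders pass values separately from the SQL text, so input such as
    # "'; DROP TABLE conversations; --" is stored as plain data
    conn.execute(
        "INSERT INTO conversations (user_text, model_reply) VALUES (?, ?)",
        (user_text, model_reply),
    )
    conn.commit()
    # Escape <, >, & and quotes so injected markup is shown as text, not executed
    return f"<p>{html.escape(model_reply)}</p>"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE conversations (user_text TEXT, model_reply TEXT)")
```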

Usage Policies

OpenAI has usage policies regarding inappropriate content, hate speech, or disallowed uses. Ensure your application respects these policies to avoid account suspension or legal issues.

Deployment Strategies and Real-World Integrations

Command-Line Tools

You can build AI-driven CLI tools with Python and the ChatGPT API. For example, a content summarization script that processes text files and outputs condensed versions.

Web Applications (Flask or Django)

For interactive, user-facing experiences, integrate ChatGPT into a web application:

Flask:

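```python
import os

from flask import Flask, jsonify, request
from openai import OpenAI

app = Flask(__name__)
client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))  # never hard-code the key


@app.route("/ask", methods=["POST"])
def ask_chatgpt():
    payload = request.get_json(silent=True) or {}
    user_query = payload.get("question")
    if not user_query:
        return jsonify({"error": "Missing 'question' field"}), 400

    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": user_query}],
    )
    return jsonify({"answer": response.choices[0].message.content})


if __name__ == "__main__":
    app.run(debug=True)  # debug mode is for local development only
```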

Django: Create a dedicated view or endpoint for handling ChatGPT queries, returning JSON or rendering a template with the AI-generated response.

Desktop Applications (PyQt or Tkinter)

If you build desktop apps, you can embed ChatGPT queries in your interface to handle tasks like text generation or real-time assistance.

Microservices Architecture

In a service-oriented architecture, you can separate ChatGPT functionality into its own service, communicating through REST or gRPC. This design can help with scaling, versioning, and security.

Serverless Deployments

Platforms like AWS Lambda, Google Cloud Functions, or Azure Functions let you host your ChatGPT API calls without managing a full server. This can be cost-effective and easy to scale.
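The following is a minimal sketch of an AWS Lambda handler behind an API Gateway proxy integration. That integration passes the HTTP body as a JSON string in `event["body"]` and expects `statusCode` and `body` in the return value. The request logic sits in `handle_question`, a hypothetical name, so it can be tested without AWS or network access. The model name is an assumption.

```python
import json
from typing import Any, Callable


def handle_question(event: dict[str, Any], complete: Callable[[str], str]) -> dict[str, Any]:
    """Validate the request body, call the model via `complete`, and build the response."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        body = {}
    question = body.get("question") if isinstance(body, dict) else None
    if not question:
        return {"statusCode": 400, "body": json.dumps({"error": "Missing 'question' field"})}
    answer = complete(question)
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"answer": answer}),
    }


def lambda_handler(event, context):
    # Imported here so the module loads even where openai isn't installed (e.g., tests)
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the function's environment variables

    def complete(question: str) -> str:
        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[{"role": "user", "content": question}],
        )
        return response.choices[0].message.content

    return handle_question(event, complete)
```

In production, you would typically create the client once at module level so that warm invocations reuse it.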

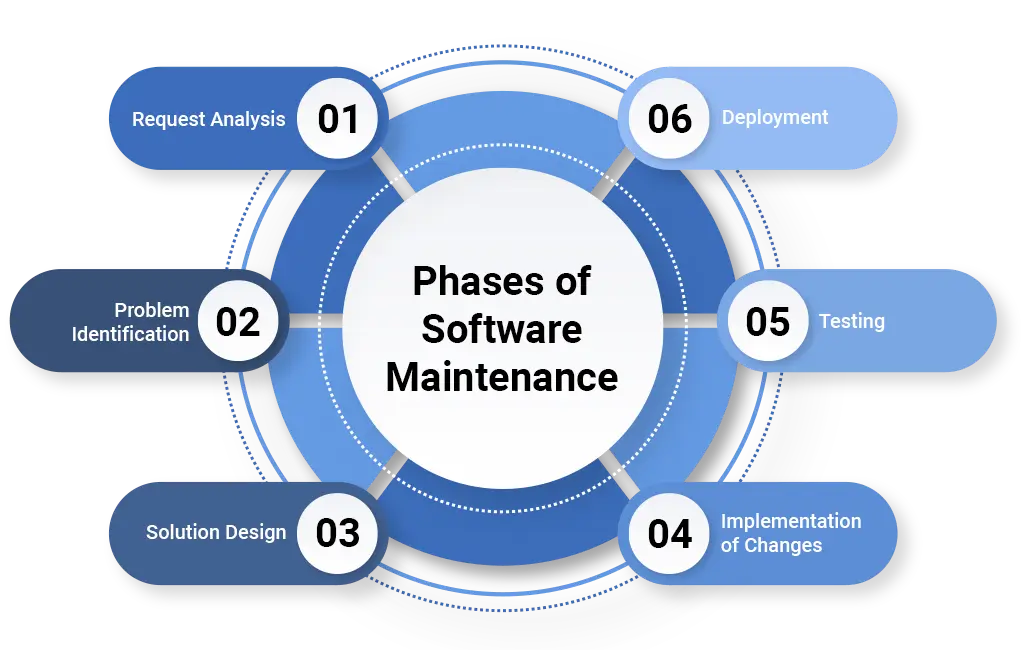

Testing and Maintenance

Unit Testing and Mocking

It can be costly and slow to query the ChatGPT API every time you run tests. Instead, mock the responses:

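```python
import unittest
from unittest.mock import MagicMock


def ask(client, question):
    """Application code under test: sends a question and returns the reply text."""
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": question}],
    )
    return response.choices[0].message.content


class TestChatGPTIntegration(unittest.TestCase):
    def test_chatgpt_response(self):
        # A MagicMock stands in for the OpenAI client, so no network call is made
        mock_client = MagicMock()
        mock_client.chat.completions.create.return_value.choices = [
            MagicMock(message=MagicMock(content="Mocked response"))
        ]

        self.assertEqual(ask(mock_client, "Hello?"), "Mocked response")
        mock_client.chat.completions.create.assert_called_once()


if __name__ == "__main__":
    unittest.main()
```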

Monitoring Application Performance

Keep tabs on latency, error rates, and usage patterns. Tools like Prometheus, Grafana, or third-party APM (Application Performance Monitoring) services can help track metrics.
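Before adopting a full monitoring stack, you can record the latency and outcome of each call with the standard library. The following is a minimal sketch. `timed_call` is a hypothetical decorator that logs through Python's logging module. In production, you would export these measurements to a tool such as Prometheus instead.

```python
import functools
import logging
import time

logger = logging.getLogger("chatgpt.metrics")


def timed_call(func):
    """Log the duration and outcome of each call to the wrapped function."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception:
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.warning("%s failed after %.0f ms", func.__name__, elapsed_ms)
            raise
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info("%s succeeded in %.0f ms", func.__name__, elapsed_ms)
        return result
    return wrapper
```

Decorating the function that calls the API, for example `ask` from the testing example, records every request without changing its code.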

Model Updates

OpenAI periodically updates their models. You may want to test new versions to evaluate performance, cost, and improvements. Keep your dependencies (like the OpenAI library) up to date.

Frequently Asked Questions

- Can I use ChatGPT for free?

The ChatGPT API is a pay-as-you-go service, billed separately from ChatGPT subscriptions. OpenAI has at times offered free credits to new accounts, so always check current pricing.

- Is ChatGPT secure for enterprise applications?

OpenAI maintains robust security practices, and by default, data submitted through the API is not used to train its models. Always consult the latest OpenAI policies and handle sensitive data responsibly.

- Which model should I use for my project?

gpt-4o-mini is a cost-effective choice for general conversation. If you need advanced reasoning or more comprehensive context handling, gpt-4o or a reasoning model such as o3-mini might be more suitable (availability may vary).

- How do I reduce token usage and cost?

Keep your prompts concise, and limit max_tokens so responses stay short if your use case allows it.

- What if ChatGPT provides incorrect information or hallucinations?

Large language models can produce inaccuracies. It’s crucial to verify output for critical tasks and implement disclaimers or external validation as needed.

- Can I fine-tune ChatGPT?

The ChatGPT app itself cannot be fine-tuned, but several of the models behind the API, including gpt-3.5-turbo, gpt-4o, and gpt-4o-mini, can be fine-tuned on your own data. Advanced context and role-based prompting can also achieve specialized behavior without fine-tuning.

- Is ChatGPT multilingual?

Yes, it can understand and generate text in multiple languages, but performance varies based on language and complexity.

Conclusion

Building AI-enhanced Python applications has never been more accessible. With the ChatGPT API, you can integrate advanced language capabilities into projects ranging from small-scale scripts to large enterprise systems. Here’s a quick recap:

- Set Up Your Environment: Install Python packages, secure your API key, and establish a stable development environment.

- Understand the API: Familiarize yourself with the endpoints, models, and parameters that shape ChatGPT’s behavior.

- Master Prompt Engineering: How you phrase your requests is crucial. Provide context, specify format, and test different prompts for best results.

- Handle Errors and Costs: Implement robust error handling, plan for rate limits, and monitor token usage.

- Deploy Confidently: Use modern infrastructure like containers, serverless functions, or microservices to ensure reliable and scalable operation.

- Stay Informed: Keep track of OpenAI’s model updates, API changes, and evolving best practices in NLP.

With these foundations, you can craft intelligent chatbots, auto-generate content, analyze text, or build creative new solutions. AI-driven technology is advancing rapidly, and by integrating ChatGPT with Python, you place yourself at the forefront of this transformative movement.

Whether you’re a hobbyist experimenting with interesting text generation projects or an entrepreneur building a scalable product, embracing ChatGPT’s capabilities will open new doors for innovation. Continue exploring the numerous possibilities, refine your prompts, and watch your Python applications come to life with AI-enhanced, natural language functionality.