Prompt engineering is an increasingly popular topic in the world of artificial intelligence (AI) and natural language processing (NLP). As advanced language models such as GPT-3.5, GPT-4, and other sophisticated transformer-based architectures continue to reshape the tech landscape, the way we communicate with these models through prompts has become a critical aspect of their success. This comprehensive guide will walk you through the fundamentals and intricacies of advanced prompt engineering for Python projects, providing you with detailed explanations, examples, and best practices.

Throughout this article, you will discover:

- The core concepts behind prompt engineering and why it’s indispensable for modern Python-based AI applications.

- Various prompting strategies (zero-shot, few-shot, chain-of-thought, etc.) and how to apply them effectively.

- Practical examples that illustrate how to structure prompts for different use cases, from text classification to code generation.

- Tools and libraries (OpenAI, Hugging Face, LangChain, etc.) that will help you integrate prompt engineering seamlessly into your Python workflow.

- Best practices, pitfalls, and testing methodologies to ensure optimal model performance in real-world scenarios.

Whether you’re a data scientist building a question-answering system, a software engineer integrating AI-driven code assistants, or a product manager exploring chatbots and language automation, this guide will serve as your comprehensive resource for advanced prompt engineering in Python.


What Is Prompt Engineering?

At its core, prompt engineering is the art and science of crafting inputs (prompts) that guide language models to produce the most relevant, accurate, and coherent outputs possible. Instead of simply feeding raw text to an AI model and hoping for the best, prompt engineering applies systematic strategies, such as providing clear instructions, examples, and context, to shape the model’s responses.

Think of a prompt as a conversation starter between you (the user or developer) and the language model. By adjusting how you frame your questions or requests, you gain significant influence over the final output. For instance, a prompt like:

“Explain how the Python map() function works.”

may yield a straightforward technical answer. However, a more detailed prompt that specifies the style, depth, or intended audience can yield far more targeted and useful results:

“Explain how the Python map() function works in simple terms for a beginner Python programmer, and provide two concrete code examples.”

This second prompt offers clearer direction, leading the model to provide an educational explanation complete with illustrative examples.

Why Prompt Engineering Matters in Python Projects

Python has become the de facto language in many areas of AI and machine learning, boasting an extensive ecosystem of libraries (NumPy, TensorFlow, PyTorch, etc.) and a large community of developers. As large language models gain traction in Python-based workflows, the way we design prompts can directly impact:

- Accuracy of Results: Well-crafted prompts reduce ambiguity, leading to outputs that are more relevant to the task at hand.

- Efficiency: A well-engineered prompt can save time and computational resources by minimizing the need for repeated calls to the model.

- User Experience: In products like chatbots or code assistants, high-quality prompts produce more natural, insightful, and satisfying interactions.

- Scalability: In large-scale applications that process high volumes of text, concise prompts reduce token usage and therefore overall operational costs.

- Ethical and Safe AI Deployment: Thoughtful prompting reduces the risk of generating harmful, biased, or misleading content.

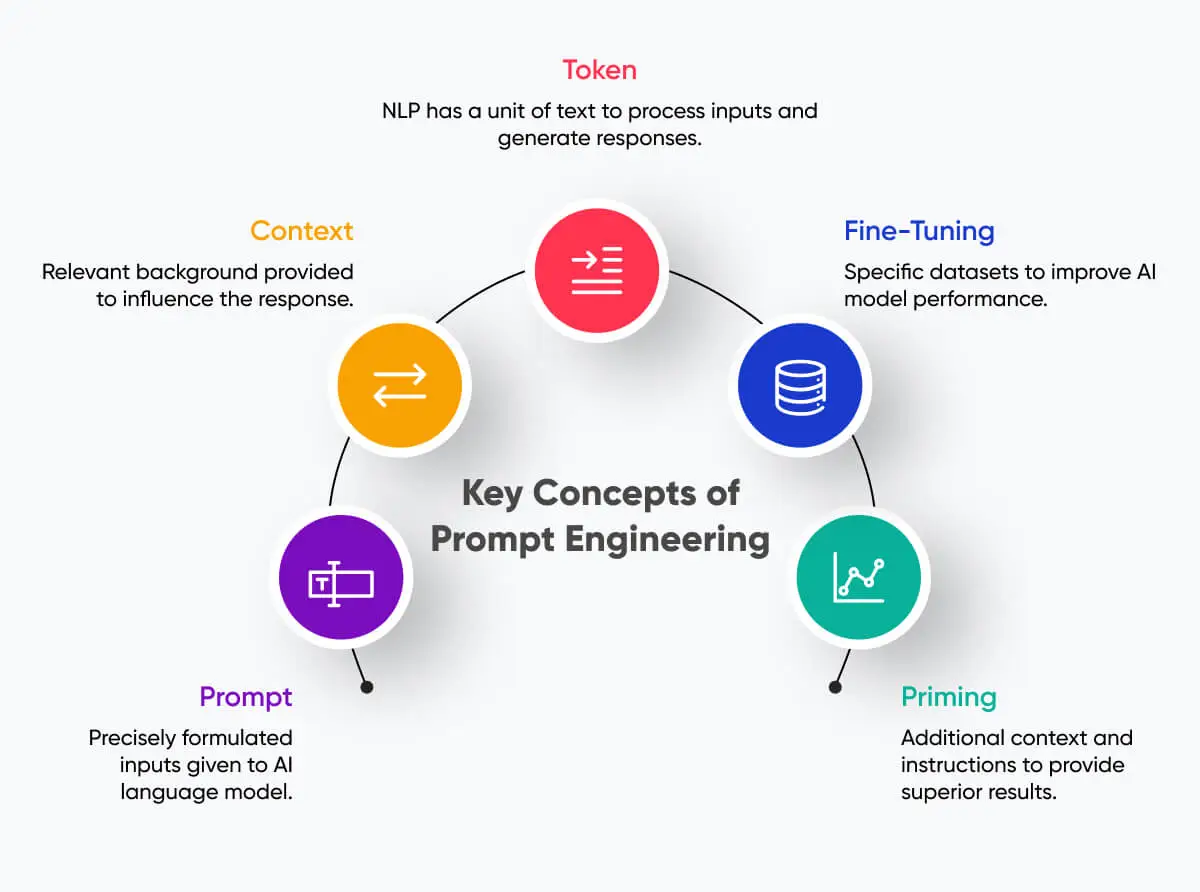

Core Concepts of Prompt Engineering

Before diving into advanced strategies, it’s essential to understand several foundational concepts that underpin prompt engineering.

1. Natural Language Processing (NLP) and Large Language Models (LLMs)

Large Language Models (LLMs) like GPT-3.5, GPT-4, and others are capable of understanding and generating text based on patterns they’ve learned from vast amounts of training data. Their ability to produce coherent text stems from statistical relationships between words, phrases, and broader contexts. This context-awareness is what makes prompt engineering both powerful and necessary: the model’s response hinges on how well you guide its “understanding” of the desired outcome.

2. Context Windows

All language models have a context window, which is the maximum number of tokens (sub-word units) they can process at once, covering both the prompt and the generated output. When a conversation grows beyond that limit, something has to give: an API may reject the request or cut the reply short, and chat applications that trim history drop the earliest turns, which can hurt the coherence of the conversation. When engineering prompts, you must be mindful of:

- Prompt Length: Keep your prompt short enough to leave room in the context window for the model’s reply.

- Relevant Context: Prioritize the most important information and instructions to help the model focus on the crucial parts of the task.

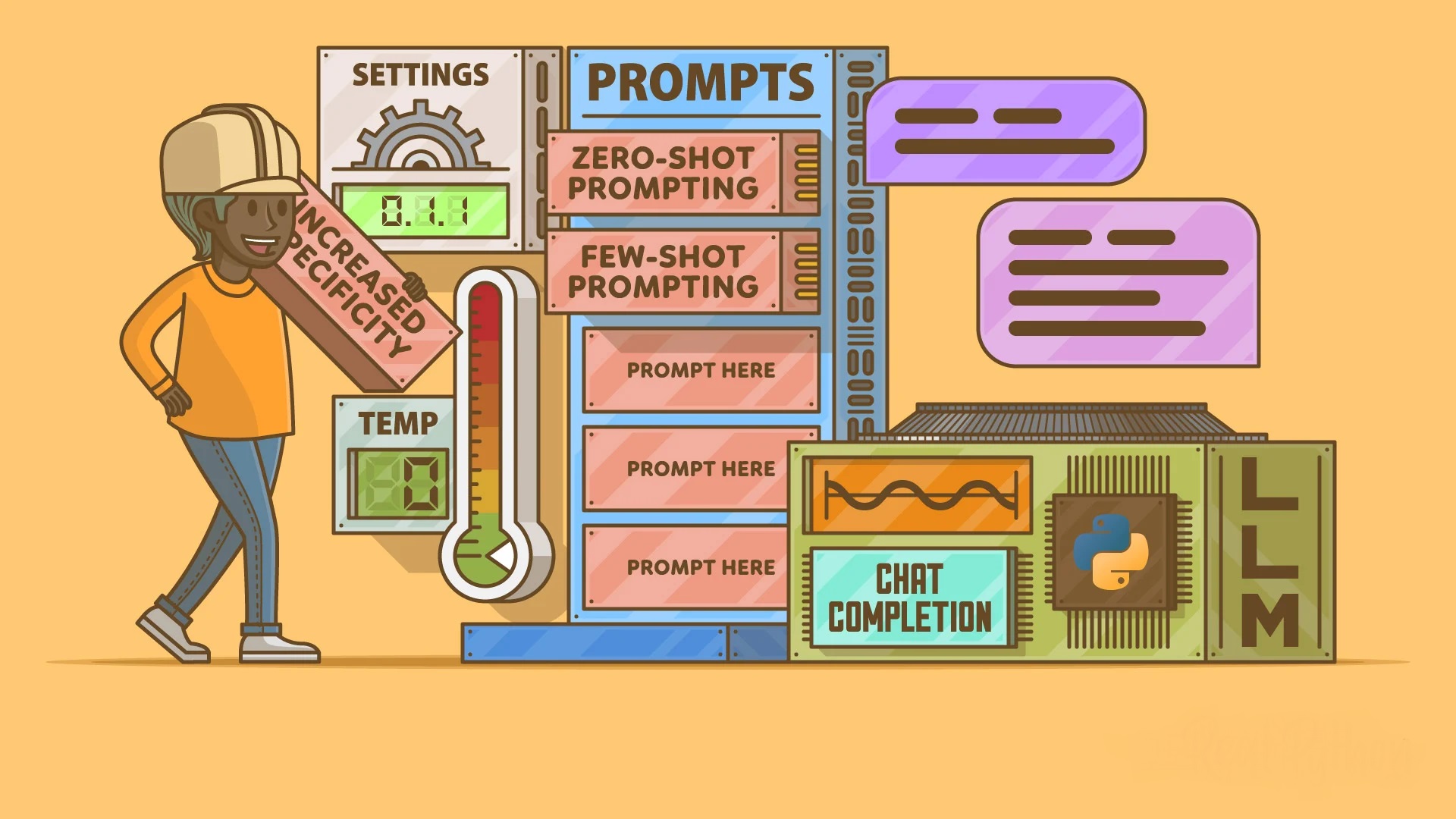

3. Model Behavior and Temperature

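Models like GPT-3.5 or GPT-4 expose sampling parameters that control how deterministic or “creative” their outputs are. The most commonly referenced are:

- Temperature: Controls randomness in text generation by rescaling the model’s token probabilities. A temperature above 1 flattens the distribution and encourages more varied outputs, while a temperature below 1 sharpens it and pushes the model toward more deterministic answers; values near 0 make the model pick the most likely token almost every time. The OpenAI Python library lets you set the temperature on each request.

- Top-k and Top-p: Limits on which tokens the model may choose from when generating each new token. Top-k keeps only the k most likely tokens; top-p (nucleus sampling) keeps the smallest set of tokens whose cumulative probability reaches p. Lower values make outputs more predictable; higher values can yield more creative or unexpected results. Hugging Face’s generate() accepts both top_k and top_p, while the OpenAI API exposes top_p.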
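To make these parameters concrete, here is a minimal pure-Python sketch of how temperature, top-k, and top-p are commonly applied to a model’s raw scores (logits) before a token is sampled. The logits are made-up numbers for a four-token vocabulary, and real libraries implement this internally and far more efficiently; the sketch only illustrates the idea and requires a temperature above 0.

```python
import math
import random


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert logits to probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def filter_top_k(probs: list[float], k: int) -> list[float]:
    """Keep only the k most likely tokens, then renormalize."""
    keep = set(sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k])
    kept = [p if i in keep else 0.0 for i, p in enumerate(probs)]
    total = sum(kept)
    return [p / total for p in kept]


def filter_top_p(probs: list[float], p: float) -> list[float]:
    """Keep the smallest set of most likely tokens whose cumulative probability reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = set(), 0.0
    for i in order:
        keep.add(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    kept = [q if i in keep else 0.0 for i, q in enumerate(probs)]
    total = sum(kept)
    return [q / total for q in kept]


def sample(probs: list[float], rng: random.Random) -> int:
    """Draw a token index according to the (filtered) probabilities."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Hypothetical logits for a 4-token vocabulary.
logits = [2.0, 1.0, 0.5, -1.0]
cold = softmax(logits, temperature=0.5)  # more peaked: favors token 0
hot = softmax(logits, temperature=1.5)   # flatter: more variety
probs = filter_top_p(filter_top_k(softmax(logits), k=3), p=0.9)
token = sample(probs, random.Random(0))
```

Comparing `cold` and `hot` shows the same logits producing a sharper or flatter distribution, and chaining the two filters shows how top-k and top-p remove unlikely tokens before sampling.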

4. Creativity vs. Control

Prompt engineers must strike a balance between creativity and control. In tasks like short story generation, you may want to encourage a more creative output by adjusting temperature and letting the model roam free. On the other hand, when generating code or extracting data, you’ll want tightly controlled outputs to ensure accuracy and consistency. The prompt itself can specify how creative or strict the model should be:

“You are a Python expert. Please provide a concise, step-by-step solution to the following coding problem without any additional commentary or explanation.”

By clearly instructing the model, you reduce the chance of random or confusing outputs.

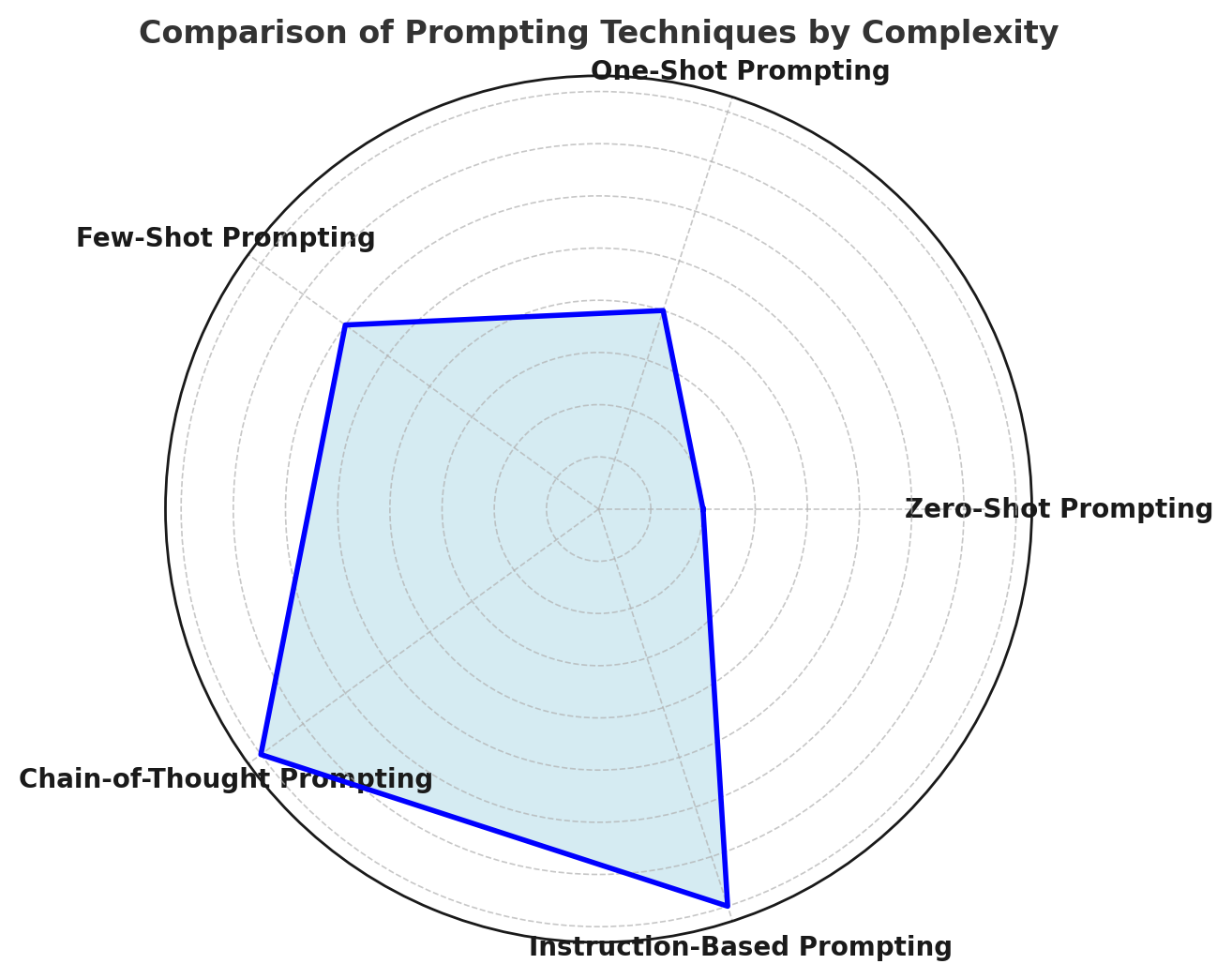

Types of Prompting Techniques

Prompt engineering often revolves around several core prompting strategies. Each strategy has its unique advantages and use cases. Here are the primary prompting paradigms you should understand:

1. Zero-Shot Prompting

Zero-shot prompting means you’re giving the model a task without showing any examples of the desired output. Instead, the model relies solely on your instruction and its general knowledge to generate the result. This approach is useful when:

- The task is straightforward or widely covered by the model’s training.

- You want to test how well the model performs without explicit examples.

For instance, a zero-shot prompt for summarization might be:

“Summarize the following article in one paragraph: [article text].”

2. One-Shot Prompting

In one-shot prompting, you give the model exactly one example of input and the corresponding desired output. This technique can significantly improve the model’s performance by clarifying the task format. Here’s a simplified example:

Instruction: “Convert sentences to a more formal style.”

Example:

- Input: “Hey, how’s it going?”

- Output: “Hello, how are you doing today?”

New Input: “What’s up?”

The model learns from the single example how to format the text. It will then try to replicate that style for “What’s up?”

3. Few-Shot Prompting

Few-shot prompting involves providing multiple examples to demonstrate how you want the model to behave. This approach is particularly powerful for tasks where additional context or variety is needed. For example:

Instruction: “Rewrite the following informal statements in a polite, professional tone.”

Example 1:

- Input: “Send me that file ASAP.”

- Output: “Could you please send me that file at your earliest convenience?”

Example 2:

- Input: “Tell the boss I’m sick of these meetings.”

- Output: “Please inform my manager that I would prefer to reduce the frequency of these meetings.”

New Input: “Get me the latest project update right away!”

By providing two examples, you clarify the pattern and style, increasing the likelihood the model will produce an accurate, consistent output.
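Zero-shot, one-shot, and few-shot prompts differ only in how many worked examples precede the new input, so one small helper can build all three. The sketch below is one possible layout (the function name and the “Input:/Output:” labels are illustrative choices, not a standard), reusing the examples from this section.

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], new_input: str) -> str:
    """Assemble an instruction, worked examples, and the new input into one prompt.

    An empty examples list gives a zero-shot prompt; one example gives a one-shot prompt.
    """
    lines = [instruction, ""]
    for number, (example_input, example_output) in enumerate(examples, start=1):
        lines.append(f"Example {number}:")
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    lines.append(f"Input: {new_input}")
    lines.append("Output:")  # leave the answer slot open for the model to complete
    return "\n".join(lines)


examples = [
    ("Send me that file ASAP.",
     "Could you please send me that file at your earliest convenience?"),
    ("Tell the boss I'm sick of these meetings.",
     "Please inform my manager that I would prefer to reduce the frequency of these meetings."),
]
prompt = build_few_shot_prompt(
    "Rewrite the following informal statements in a polite, professional tone.",
    examples,
    "Get me the latest project update right away!",
)
print(prompt)
```

Ending the prompt with an open “Output:” slot nudges the model to answer in exactly the format the examples established.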

4. Chain-of-Thought Prompting

Chain-of-thought prompting is a technique where you instruct the model to spell out its reasoning process. Models do not always show intermediate steps by default, but you can encourage them to outline those steps explicitly. This can be particularly helpful in reasoning tasks (math problems, logic puzzles, advanced question-answering):

“Explain your reasoning step by step, then provide the final answer.”

However, note that exposing chain-of-thought can sometimes result in overly verbose or less direct answers. It can be great for internal debugging but might not be ideal for end-user consumption. You can still use the chain-of-thought approach behind the scenes to improve answer accuracy, then summarize the final result in a more concise form.
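One way to use chain-of-thought behind the scenes is to ask for step-by-step reasoning ending in a clearly marked final line, then show the end user only that line. This is a minimal sketch; the “Final answer:” marker is an assumed convention, and `fake_output` is a hand-written stand-in for a real model response.

```python
import re
from typing import Optional


def build_cot_prompt(question: str) -> str:
    """Ask the model to reason step by step and to end with a marked final answer."""
    return (
        f"{question}\n\n"
        "Explain your reasoning step by step, then give the final answer "
        "on its own line in the form 'Final answer: <answer>'."
    )


def extract_final_answer(model_output: str) -> Optional[str]:
    """Return the text after the last 'Final answer:' marker, or None if it is missing."""
    matches = re.findall(r"Final answer:\s*(.+)", model_output)
    return matches[-1].strip() if matches else None


# Hand-written stand-in for a model response, used instead of a real API call.
fake_output = (
    "Step 1: A train travels 60 km in 1 hour.\n"
    "Step 2: In 3 hours it travels 3 * 60 = 180 km.\n"
    "Final answer: 180 km"
)
print(extract_final_answer(fake_output))  # shown to the user; the steps stay internal
```

If the marker is missing, returning None lets your application retry or fall back instead of showing raw reasoning to users.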

5. Instruction-Based Prompting

Instruction-based prompting involves giving explicit instructions, often in a structured format, to guide the model’s output. This style is beneficial in enterprise or production settings where consistency and reliability are paramount. An example might look like this:

- Provide a summary of the text below.

- Identify the main topic.

- Offer one suggestion for further reading.

By enumerating tasks, you reduce ambiguity and help the model understand the required format.
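Numbered instructions are easy to generate programmatically, which keeps the format identical across every request. A minimal sketch (the helper name and the “Text:” label are illustrative assumptions):

```python
def build_instruction_prompt(instructions: list[str], text: str) -> str:
    """Number each instruction so the model can address them in order, then append the input."""
    numbered = [f"{i}. {step}" for i, step in enumerate(instructions, start=1)]
    return "\n".join(numbered) + f"\n\nText:\n{text}"


prompt = build_instruction_prompt(
    [
        "Provide a summary of the text below.",
        "Identify the main topic.",
        "Offer one suggestion for further reading.",
    ],
    "[text to analyze]",
)
print(prompt)
```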

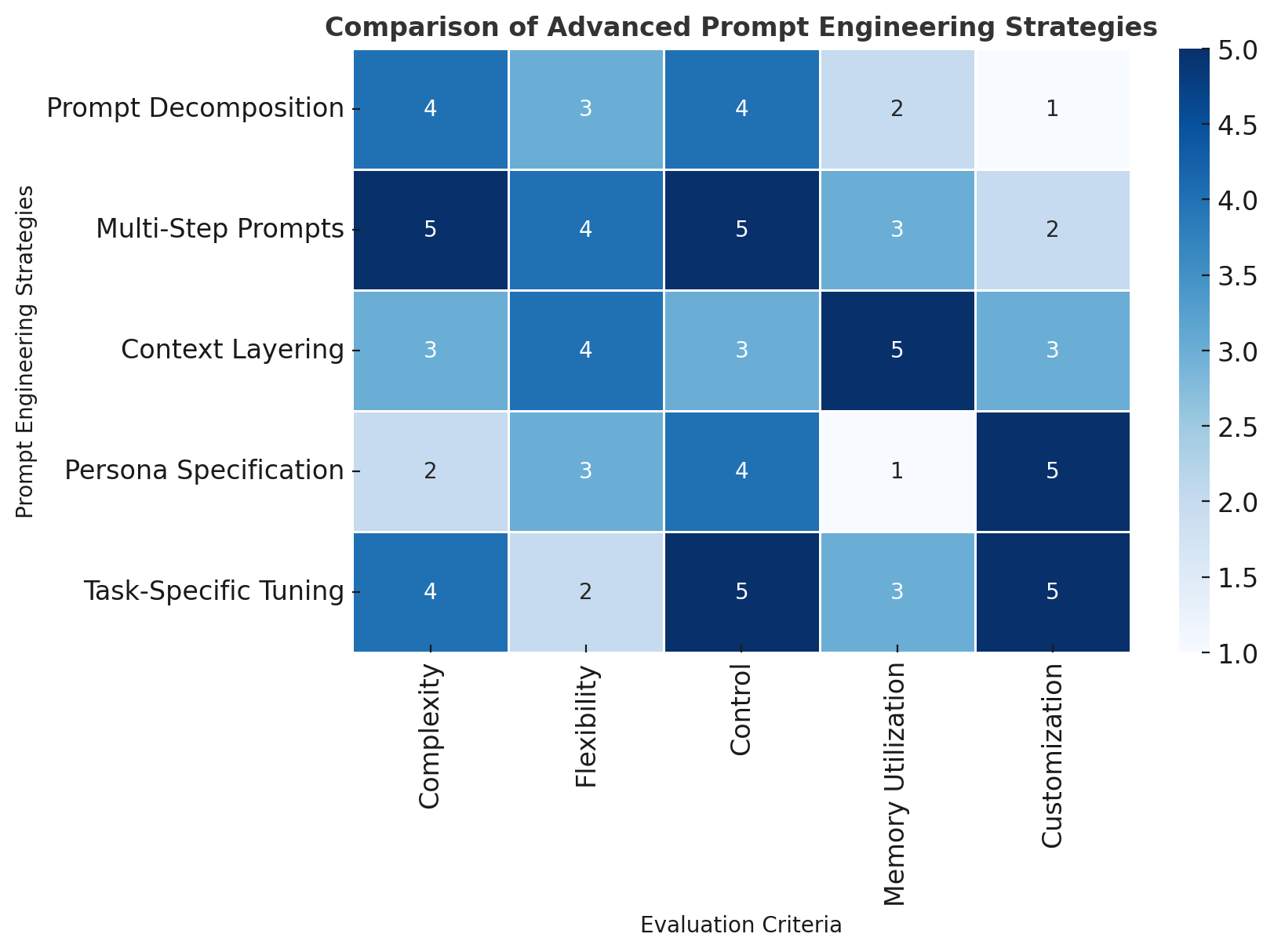

Advanced Prompt Engineering Strategies

With the foundational concepts covered, we can now explore advanced strategies that seasoned developers and data scientists often use to elicit nuanced, highly controlled outputs from language models.

1. Prompt Decomposition

Rather than tackling a complex question or task directly, prompt decomposition involves breaking down a question into smaller, more manageable parts. For example, if you have a multi-faceted request, you can first ask the model to solve sub-tasks, then aggregate results in a final step.

Example

Instead of:

“Explain the concept of gradient descent, compare it to stochastic gradient descent, and then provide three real-world use cases.”

You can break it down as:

- “Explain the concept of gradient descent in simple terms.”

- “Compare gradient descent with stochastic gradient descent.”

- “Provide three real-world use cases for gradient descent.”

This approach often yields clearer, more structured responses and reduces the likelihood of the model mixing up different parts of the answer.
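Decomposition translates naturally into code: send each sub-question separately, then make one final call that combines the partial answers. In this sketch, `ask` is a hypothetical function that sends one prompt to a model and returns its text (for example, a thin wrapper around the OpenAI client), and `stub_ask` is a placeholder so the example runs without an API key.

```python
from typing import Callable

# Hypothetical signature for any "prompt in, model text out" wrapper.
Ask = Callable[[str], str]


def answer_by_decomposition(sub_questions: list[str], ask: Ask) -> str:
    """Ask each sub-question separately, then request one combined answer."""
    partial_answers = [ask(q) for q in sub_questions]
    combined = "\n\n".join(
        f"Q: {q}\nA: {a}" for q, a in zip(sub_questions, partial_answers)
    )
    return ask(
        "Combine the following answers into one well-structured explanation:\n\n" + combined
    )


def stub_ask(prompt: str) -> str:
    """Placeholder for a real model call: reports how long the prompt was."""
    return f"<answer to a {len(prompt)}-character prompt>"


result = answer_by_decomposition(
    [
        "Explain the concept of gradient descent in simple terms.",
        "Compare gradient descent with stochastic gradient descent.",
        "Provide three real-world use cases for gradient descent.",
    ],
    stub_ask,
)
```

Because the sub-questions are independent, they could also be sent concurrently; only the final combining call has to wait for all of them.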

2. Building Multi-Step Prompts

Multi-step prompts are an extension of prompt decomposition, where you make multiple calls to the model in a pipeline. For example:

- Generate a list of ideas: “Suggest five potential machine learning project ideas.”

- Refine an idea: “For idea number 3 from the previous list, provide a high-level project plan.”

- Detail a sub-component: “Within that project plan, elaborate on the data preprocessing steps.”

This approach lets you maintain control at each stage while leveraging the model’s capabilities iteratively. It also helps you manage context window limitations by summarizing outputs and passing only the crucial information to the next step.
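A multi-step pipeline differs from decomposition in that each call depends on the previous one. The sketch below feeds each step a bounded slice of the previous output; `ask` is again a hypothetical model wrapper, and simple truncation stands in for the summarization step a production pipeline might use.

```python
from typing import Callable

Ask = Callable[[str], str]  # hypothetical: any prompt -> text model wrapper


def run_pipeline(steps: list[str], ask: Ask, max_context_chars: int = 2000) -> list[str]:
    """Run prompts in order, feeding each step the (trimmed) previous output."""
    outputs: list[str] = []
    previous = ""
    for step in steps:
        prompt = step if not previous else f"Previous result:\n{previous}\n\nTask: {step}"
        result = ask(prompt)
        outputs.append(result)
        # Pass forward only a bounded amount of text. A real system might instead
        # ask the model to summarize `result`; truncation keeps this sketch simple.
        previous = result[:max_context_chars]
    return outputs


steps = [
    "Suggest five potential machine learning project ideas.",
    "For idea number 3 from the previous list, provide a high-level project plan.",
    "Within that project plan, elaborate on the data preprocessing steps.",
]
# Stub model for illustration only: returns a label instead of calling an API.
outputs = run_pipeline(steps, lambda prompt: f"reply to {len(prompt)} chars")
```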

3. Context Layering with Memory

When building conversational systems or chatbots, you often need to maintain a “memory” of previous exchanges. Chat model APIs are typically stateless, so the model sees only what you send in each request, and the context window limits how much history you can resend. Context layering helps you summarize or store relevant information from earlier turns and append it to the prompt in subsequent interactions.

Technique:

- Keep a running summary of the conversation or crucial data points.

- Only pass along the relevant pieces of that summary to each new prompt to keep the conversation consistent.

For instance, in an FAQ bot:

- User asks about Python version compatibility.

- The model answers.

- Summarize that the user is concerned about “Python 3.9 or higher” and store it.

- In the next user query, the system appends that summary, so the model remembers the user is working with “Python 3.9 or higher.”
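The FAQ-bot flow above can be sketched as a small memory object that stores durable facts and prepends them to each new query. The class name and prompt wording are hypothetical; in a real bot the facts would usually come from asking the model to summarize each turn, whereas here they are added by hand to keep the example self-contained.

```python
class ConversationMemory:
    """Keep a short list of durable facts and prepend them to every new prompt."""

    def __init__(self, max_facts: int = 5) -> None:
        self.max_facts = max_facts
        self.facts: list[str] = []

    def remember(self, fact: str) -> None:
        if fact not in self.facts:
            self.facts.append(fact)
        # Drop the oldest facts once the budget is exceeded.
        self.facts = self.facts[-self.max_facts:]

    def build_prompt(self, user_query: str) -> str:
        if not self.facts:
            return user_query
        summary = "\n".join(f"- {fact}" for fact in self.facts)
        return f"Known context about this user:\n{summary}\n\nUser question: {user_query}"


memory = ConversationMemory()
memory.remember("The user is working with Python 3.9 or higher.")
print(memory.build_prompt("Will this library work for me?"))
```

Capping the number of facts keeps the memory block from growing without bound as the conversation continues.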

4. Persona and Role Specification

You can drastically alter a model’s output by assigning it a specific persona or role. This helps the model adopt a certain tone, style, or perspective. For instance:

“You are a Python tutor who specializes in data science for beginners. Please explain linear regression in layman’s terms.”

Versus:

“You are a senior machine learning engineer. Please provide a formal, in-depth explanation of linear regression, referencing mathematical formulas.”

This technique is powerful in building specialized assistants or targeted content generation systems, ensuring the model’s style and complexity match user expectations.
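With chat-style APIs, a persona is typically placed in a system message that precedes the user’s request. This sketch only builds the message lists (the helper name is illustrative); either list could then be passed as `messages=` to a chat completions call, such as `client.chat.completions.create(model=..., messages=tutor)` in the openai package.

```python
def persona_messages(persona: str, request: str) -> list[dict[str, str]]:
    """Build a chat-style message list with the persona in the system message."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": request},
    ]


tutor = persona_messages(
    "You are a Python tutor who specializes in data science for beginners.",
    "Please explain linear regression in layman's terms.",
)
engineer = persona_messages(
    "You are a senior machine learning engineer.",
    "Please provide a formal, in-depth explanation of linear regression, "
    "referencing mathematical formulas.",
)
```

Keeping the persona separate from the request lets you swap personas without touching the user’s text.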

5. Task-Specific Tuning

Though not strictly “prompt engineering,” task-specific tuning (or fine-tuning) refers to customizing a base model for a specialized domain. If you’re working with a model that allows fine-tuning (like some GPT variants), you can train it on examples of the exact kind of output you want. The synergy of fine-tuning plus advanced prompt engineering yields highly reliable, domain-specific results.
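Fine-tuning data is usually supplied as a file of example conversations. The sketch below serializes (input, desired output) pairs as JSON Lines in a chat-message layout similar to the one OpenAI documents for fine-tuning chat models; treat the exact schema as an assumption and check your provider’s documentation before uploading anything.

```python
import json


def to_finetune_jsonl(system_prompt: str, pairs: list[tuple[str, str]]) -> str:
    """Serialize (input, desired output) pairs as chat-format JSONL, one example per line."""
    lines = []
    for user_text, assistant_text in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


training_data = to_finetune_jsonl(
    "Rewrite informal statements in a polite, professional tone.",
    [("Send me that file ASAP.",
      "Could you please send me that file at your earliest convenience?")],
)
```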

Tools and Libraries for Prompt Engineering in Python

Python offers a rich ecosystem for working with large language models. Below are some of the most widely used tools and libraries that help you streamline prompt engineering efforts.

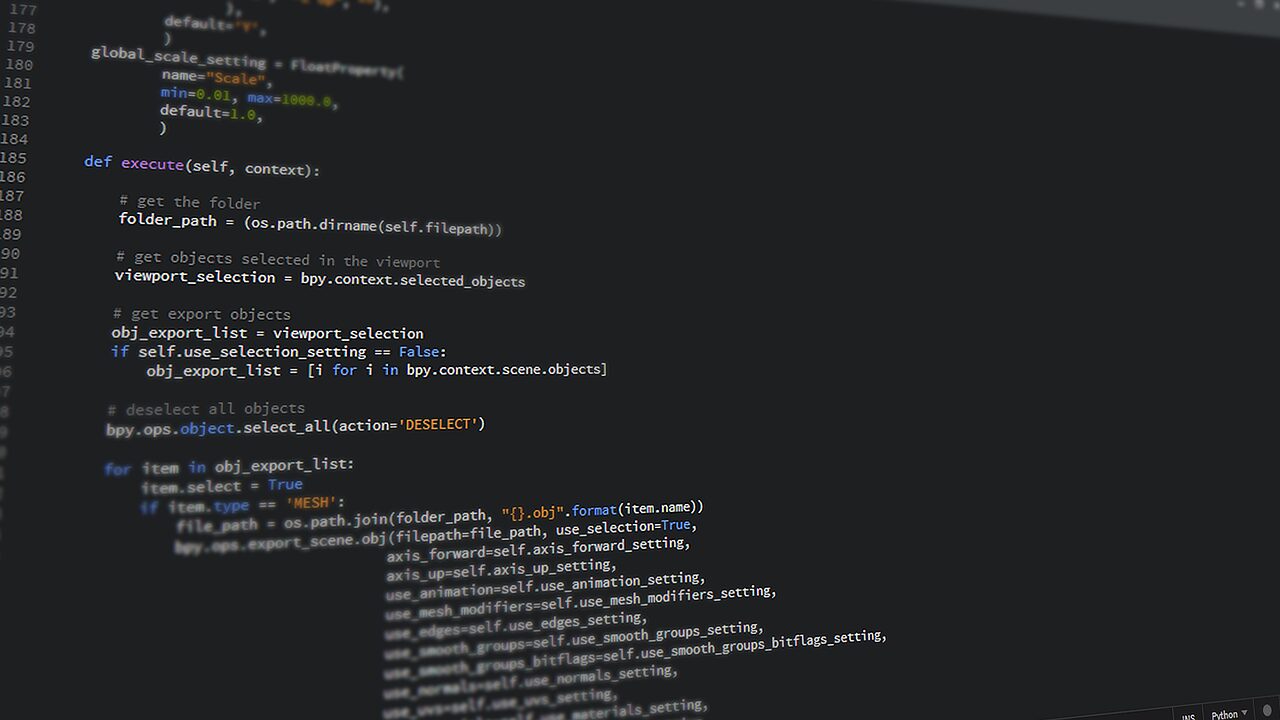

1. OpenAI API

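The OpenAI API provides programmatic access to GPT-3.5, GPT-4, and other OpenAI models. You can craft prompts, set parameters (temperature, max tokens, etc.), and retrieve model outputs directly in your Python code. The module-level openai.Completion.create interface was removed in version 1.0 of the openai package, and the text-davinci-003 model has been retired, so a typical call now goes through a client object and the chat completions endpoint:

```python
from openai import OpenAI

# The client reads the API key from the OPENAI_API_KEY environment variable,
# which avoids hard-coding secrets in source code.
client = OpenAI()

response = client.chat.completions.create(
    model="gpt-4",  # any chat model your account has access to
    messages=[
        {"role": "user", "content": "Explain the Python map() function with an example."}
    ],
    temperature=0.7,  # moderate randomness
    max_tokens=150,   # upper bound on the length of the reply
)

print(response.choices[0].message.content.strip())
```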

Key advantages:

- Easy integration with Python

- Access to various model endpoints

- Configurable parameters for controlling output

2. Hugging Face Transformers

The Hugging Face Transformers library is a go-to resource for open-source LLMs (such as GPT-2, GPT-Neo, BLOOM, and others). It gives you the flexibility to load models locally or use the Hugging Face Hub:

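```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "gpt2"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

prompt = "Explain what tokenization is in NLP."
inputs = tokenizer(prompt, return_tensors="pt")  # input_ids plus attention_mask

output = model.generate(
    **inputs,
    max_new_tokens=100,  # counts only generated tokens (max_length would include the prompt)
    do_sample=True,      # temperature only takes effect when sampling is enabled
    temperature=0.7,
    pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no padding token of its own
)

response = tokenizer.decode(output[0], skip_special_tokens=True)
print(response)
```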

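You can implement advanced prompting techniques, such as few-shot learning or chain-of-thought, the same way: carefully construct the prompt string before tokenizing. Keep in mind that base models like GPT-2 are not instruction-tuned; they continue text rather than follow instructions, so few-shot patterns usually work better with them than bare instructions.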

3. LangChain

LangChain is a relatively new but rapidly growing framework for building applications around language models. It simplifies the creation of multi-step pipelines, retrieval-based prompting, and integration with other data sources. Its features include:

- Chains: Multi-step workflows where each step can refine or transform the model’s output.

- Memory: Methods to keep track of conversation history or other relevant context.

- Agents: Components that let an LLM decide which tools (such as search, external data sources, or APIs) to call in order to complete a task.

LangChain can be invaluable for advanced prompt engineering because it provides built-in primitives for passing context forward and modularizing your prompt-building approach.

4. GPT Index (LlamaIndex)

Formerly known as GPT Index, LlamaIndex helps connect external data (documents, databases, etc.) to large language models. It lets you organize your data into indexes (tree, list, keyword index, etc.) so that the most relevant information can be retrieved efficiently and inserted into the prompt. This is key for advanced prompt engineering when your tasks require up-to-date or domain-specific data.

5. Pinecone and Other Vector Databases

Prompt engineering often involves retrieving relevant context, especially if your project needs to reference large amounts of text. Pinecone and Weaviate are vector databases, and FAISS is a library for efficient similarity search; all three can store embeddings of your text data and find the entries closest to a query. By retrieving only the most relevant chunks of text to include in your prompt, you can improve response accuracy while reducing token usage.
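The retrieval step can be illustrated with a brute-force, in-memory stand-in for a vector store: rank stored chunks by cosine similarity to the query embedding and place the best matches in the prompt. The three-dimensional “embeddings” below are invented toy values; real ones come from an embedding model, and vector databases replace the linear scan with approximate nearest-neighbor indexes.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k_chunks(query_vec: list[float], chunks: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the k chunk texts whose embeddings are most similar to the query."""
    ranked = sorted(chunks, key=lambda text: cosine_similarity(query_vec, chunks[text]), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, context_chunks: list[str]) -> str:
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Toy embeddings (invented values, for illustration only).
chunks = {
    "Our app supports Python 3.9 and later.": [0.9, 0.1, 0.0],
    "Refunds are processed within 5 business days.": [0.0, 0.2, 0.9],
    "Install the package with pip.": [0.7, 0.6, 0.1],
}
query_vec = [0.8, 0.3, 0.0]  # pretend embedding of the question below
prompt = build_rag_prompt("Which Python versions are supported?", top_k_chunks(query_vec, chunks))
```

Only the two closest chunks reach the prompt, so the unrelated refund policy costs no tokens.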

Designing Prompts for Specific Use Cases

Now that you have a solid understanding of advanced prompting strategies and the Python tools available, let’s explore how to design prompts for common real-world tasks.

1. Text Classification

Goal: Classify a piece of text into predefined categories (e.g., sentiment analysis, topic classification).

Few-Shot Prompt Example:

Text: "I love using Python for data analysis!"

Category: Positive

Text: "The plot was terrible, and the characters were boring."

Category: Negative

Text: "The battery life on this phone is outstanding."

Category:

By providing examples, you guide the model toward correctly identifying the sentiment of the next text snippet.
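Building the few-shot prompt from data and validating the model’s reply against a fixed label set keeps classification output predictable. In this sketch, the label tuple and helper names are illustrative assumptions; anything outside the allowed labels is returned as None so your code can retry or flag it.

```python
from typing import Optional

ALLOWED_LABELS = ("Positive", "Negative")

FEW_SHOT_EXAMPLES = [
    ("I love using Python for data analysis!", "Positive"),
    ("The plot was terrible, and the characters were boring.", "Negative"),
]


def build_classification_prompt(text: str) -> str:
    blocks = [f'Text: "{t}"\nCategory: {label}' for t, label in FEW_SHOT_EXAMPLES]
    blocks.append(f'Text: "{text}"\nCategory:')
    return "\n\n".join(blocks)


def parse_label(model_output: str) -> Optional[str]:
    """Map the model's completion onto an allowed label, or None if it matches none."""
    words = model_output.strip().split()
    first_word = words[0].strip(".,!").capitalize() if words else ""
    return first_word if first_word in ALLOWED_LABELS else None


prompt = build_classification_prompt("The battery life on this phone is outstanding.")
```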

2. Summarization

Goal: Condense a long text into a concise summary.

Instruction-Based Prompt:

1. Summarize the following article into two paragraphs.

2. Focus on the article’s main arguments and conclusions.

3. Omit any trivial or background information.

Article: [full article text]

Breaking down the instructions ensures a well-structured summary with emphasis on the main arguments.

3. Code Generation

Goal: Generate functional code snippets or entire scripts based on textual descriptions.

Multi-Step Prompt Example:

- “I want a Python script that scrapes titles from a given website.”

- (Model outputs a skeleton script)

- “Improve the code by adding error handling and logging.”

By iterating, you gradually refine the code. Always test the generated code in a safe environment to verify correctness and security.
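Before running generated code at all, a cheap first gate is to extract the fenced code from the model’s reply and confirm it parses. This sketch assumes the model wraps code in a triple-backtick block; `ast.parse` checks syntax without executing anything, so it says nothing about correctness or safety, and the sandboxed testing above is still required.

```python
import ast
import re
from typing import Optional

FENCE = "`" * 3  # a literal triple backtick, built indirectly so it does not end this listing
CODE_BLOCK = re.compile(FENCE + r"(?:python)?[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_python_code(model_output: str) -> Optional[str]:
    """Return the body of the first fenced code block in a model response, if any."""
    match = CODE_BLOCK.search(model_output)
    return match.group(1) if match else None


def is_valid_python(code: str) -> bool:
    """Check syntax only: ast.parse never executes the code."""
    try:
        ast.parse(code)
    except SyntaxError:
        return False
    return True


# Hand-written stand-in for a model reply.
fake_response = (
    "Here is the script:\n"
    f"{FENCE}python\n"
    "def get_titles(html):\n"
    "    return []\n"
    f"{FENCE}\n"
)
code = extract_python_code(fake_response)
print(is_valid_python(code) if code else "no code block found")
```

A syntax failure can be fed straight back to the model as the next refinement prompt.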

4. Conversational AI

Goal: Build a chatbot or conversational agent that can maintain context, answer questions, or provide assistance.

Context Layering Example:

- Prompt includes user’s previous questions and the model’s answers, truncated or summarized to fit within the context window.

- The new user query is appended at the end, with instructions to maintain the conversation style.
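Truncating history to fit the context window can be as simple as keeping the system message and walking backward from the newest turn until a budget is spent. This sketch approximates size by word count, which is only a rough proxy; a real system would count tokens with the model’s tokenizer and might summarize the dropped turns rather than discard them.

```python
def trim_history(messages: list[dict[str, str]], budget: int) -> list[dict[str, str]]:
    """Keep the system message(s) plus the most recent turns that fit within `budget`."""

    def size(message: dict[str, str]) -> int:
        return len(message["content"].split())  # word count as a stand-in for tokens

    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    remaining = budget - sum(size(m) for m in system)
    kept: list[dict[str, str]] = []
    for message in reversed(turns):  # newest turn first
        if size(message) > remaining:
            break
        kept.append(message)
        remaining -= size(message)
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a friendly support assistant."},
    {"role": "user", "content": "Does the app support Python 3.8?"},
    {"role": "assistant", "content": "No, it requires Python 3.9 or higher."},
    {"role": "user", "content": "How do I upgrade?"},
]
trimmed = trim_history(history, budget=20)  # drops the oldest user turn
```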

5. Data Extraction

Goal: Extract structured data (e.g., names, dates, addresses) from unstructured text.

Few-Shot Prompt Example:

Text: "John Smith visited Berlin on May 5, 2022."

Extract: {"Name": "John Smith", "Location": "Berlin", "Date": "May 5, 2022"}

Text: "Jane Doe arrived in Paris on June 10, 2021."

Extract:

By showing how to format the extracted data, you clarify the task for the model.
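Because the examples ask for JSON, the reply can be parsed and checked mechanically instead of trusted as-is. A minimal sketch, assuming the key names from the example above; `reply` is a hand-written stand-in for a model response to the Jane Doe sentence.

```python
import json
from typing import Optional

REQUIRED_KEYS = {"Name", "Location", "Date"}


def parse_extraction(model_output: str) -> Optional[dict[str, str]]:
    """Parse the model's JSON reply and check that it has exactly the expected keys."""
    try:
        data = json.loads(model_output.strip())
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or set(data) != REQUIRED_KEYS:
        return None
    return data


reply = '{"Name": "Jane Doe", "Location": "Paris", "Date": "June 10, 2021"}'
record = parse_extraction(reply)
```

A None result signals that the prompt needs a retry or the record needs human review.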

Best Practices for Prompt Engineering

Every AI-driven Python project has unique requirements, but certain best practices consistently apply across domains:

1. Clarity and Structure in Prompts

- Use plain language: Write clear, concise instructions that reduce ambiguity.

- Format: Leverage bullet points, numbered instructions, or markup to structure your prompt. This helps the model parse instructions more reliably.

2. Providing Examples and Context

- Examples: Provide labeled examples that illustrate your desired output format whenever possible.

- Context: Include relevant background info but avoid overloading the prompt with unnecessary details.

3. Iterative Refinement

- Start simple: Begin with a straightforward prompt and see what the model outputs.

- Refine in steps: Adjust the prompt, add or remove examples, and tweak parameters based on the model’s performance.

4. Token and Cost Management

- Token Limits: Stay mindful of the context window. Summarize or omit less crucial information if you risk going over the token limit.

- Cost: Hosted APIs such as OpenAI’s bill per token, for both the prompt (input) and the generated reply (output), so streamlined prompts and sensible output limits keep costs down.
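A back-of-the-envelope estimate helps compare prompt variants before deploying them. This sketch uses the common rule of thumb of roughly four characters per token for English text, and the per-million-token prices are hypothetical placeholders, not any provider’s actual rates; for exact counts, use the model’s tokenizer (for example, tiktoken for OpenAI models).

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token for typical English text."""
    return (len(text) + 3) // 4  # ceiling division


def estimate_cost(prompt: str, expected_output_tokens: int,
                  input_price_per_million: float, output_price_per_million: float) -> float:
    """Estimated dollars for one call; input and output tokens are priced separately."""
    input_tokens = estimate_tokens(prompt)
    return (input_tokens * input_price_per_million
            + expected_output_tokens * output_price_per_million) / 1_000_000


# Hypothetical prices, for illustration only.
per_call = estimate_cost("x" * 2000, expected_output_tokens=200,
                         input_price_per_million=2.50, output_price_per_million=10.00)
monthly = per_call * 100_000  # e.g. 100,000 calls per month
```

Here a 2,000-character prompt is about 500 tokens, so trimming it in half saves more than shortening the 200-token reply would at these sample prices.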

5. Ethical and Fair Usage

- Bias: Acknowledge that language models may reflect biases from training data. Prompting can exacerbate or mitigate these biases.

- Safety: Avoid prompts that elicit harmful, hateful, or sensitive information. Incorporate content filters if your use case might encounter these issues.

- User Privacy: When dealing with personal data, ensure compliance with relevant regulations.

Common Pitfalls and How to Avoid Them

Even advanced practitioners can fall into certain pitfalls when designing prompts. Here are a few common issues and prevention strategies:

- Overcomplicating the Prompt: Too many instructions or excessive context can confuse the model.

  - Solution: Simplify. Decompose large tasks into smaller steps.

- Ambiguous Instructions: Vague or contradictory instructions lead to inconsistent outputs.

  - Solution: Provide clear, singular objectives and check the prompt for conflicting statements.

- Exceeding Context Window: Truncation can cause the model to forget important details.

  - Solution: Summarize earlier parts of the conversation or break the conversation into smaller chunks.

- Ignoring Model Parameters: Using default parameters without experimentation can limit performance.

  - Solution: Experiment with temperature, max tokens, and other parameters to find the best configuration.

- Lack of Testing: Relying on the first response without systematic testing can lead to unreliable outputs.

  - Solution: Use a validation set or iterative approach, comparing the model’s responses against expected outputs.

Testing and Validation of Prompts

Testing and validation ensure that your prompt designs consistently yield correct and useful outputs. Approaches include:

- Automated Tests: Incorporate prompt-response checks into your Python test suite. For instance, you could have a set of known inputs and expected outputs (or approximate conditions) that the model should satisfy.

- A/B Testing: If you have multiple prompt variants, compare them in real-time with a subset of your users or data to see which yields better performance.

- Human Review: For sensitive or high-stakes tasks, manual inspection of outputs is often necessary. Real-world tasks, especially in healthcare or legal domains, benefit from domain experts reviewing model outputs to ensure safety and accuracy.
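Automated checks can live in an ordinary pytest suite. In this sketch each test case pairs an input with a condition on the output rather than an exact string, since model wording varies between runs; `fake_model` is a deterministic placeholder so the harness itself runs offline, and the 0.9 threshold is an arbitrary example. Calling `evaluate_prompt` on two templates with the same cases also gives a simple offline comparison before any live A/B test.

```python
from typing import Callable

Ask = Callable[[str], str]  # hypothetical wrapper: prompt in, model text out

TEST_CASES = [
    ("I love using Python for data analysis!", lambda out: "positive" in out.lower()),
    ("The plot was terrible, and the characters were boring.", lambda out: "negative" in out.lower()),
]


def evaluate_prompt(template: str, ask: Ask) -> float:
    """Return the fraction of test cases whose output passes its check."""
    passed = 0
    for text, check in TEST_CASES:
        output = ask(template.format(text=text))
        if check(output):
            passed += 1
    return passed / len(TEST_CASES)


def fake_model(prompt: str) -> str:
    """Deterministic stand-in for a real model call."""
    return "Negative" if "terrible" in prompt else "Positive"


def test_sentiment_prompt_meets_threshold() -> None:
    # With a real model, call the API here and choose a threshold that suits the task.
    template = 'Classify the sentiment of this text as Positive or Negative.\nText: "{text}"\nCategory:'
    assert evaluate_prompt(template, fake_model) >= 0.9
```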

Real-World Examples and Case Studies

Case Study 1: Customer Support Chatbot

A software startup wants to provide a 24/7 support chatbot to answer frequently asked questions about their product. The team uses:

- LangChain for multi-step conversation management.

- OpenAI GPT-4 for language generation.

- Vector Database (Pinecone) to store and retrieve FAQ documents.

They design prompts that:

- Summarize user questions.

- Retrieve relevant FAQ data from Pinecone.

- Include the relevant data in the context.

- Provide a final user-friendly answer.

This approach ensures that each step is controlled, relevant context is included, and the chatbot remains coherent and accurate.

Case Study 2: Code Generation Assistant

A data science team is building an internal code-generation assistant to speed up their workflow. They use:

- Few-shot prompting to provide examples of properly formatted code.

- Multi-step pipeline where the first step clarifies the user’s coding goal and the second step generates the actual code snippet.

- A refinement step that asks the model to optimize or debug the generated code.

By iterating on their prompt design, they minimize syntax errors and ensure the generated code adheres to internal style guidelines.

Conclusion

Advanced prompt engineering stands at the forefront of modern NLP and AI-driven applications, especially in Python. By thoughtfully designing prompts, understanding your model’s parameters, and iteratively refining your approach, you can substantially improve the performance and reliability of large language models like GPT-3.5, GPT-4, and beyond.

Key Takeaways

- Prompt clarity is crucial: Structuring prompts with explicit instructions, examples, and roles (personas) guides the model more effectively.

- Use advanced strategies: Techniques such as multi-step prompting, context layering, and chain-of-thought reasoning allow you to tackle complex tasks systematically.

- Leverage Python tools: Libraries like OpenAI API, Hugging Face Transformers, and LangChain simplify prompt experimentation, context management, and pipeline creation.

- Iterate and Validate: Always test prompts with real or representative data, adjusting parameters and structure to optimize results.

- Be mindful of ethics and cost: Control for potential biases, manage token usage, and adhere to relevant privacy regulations.

The Future of Prompt Engineering

As language models continue to evolve, so too will the techniques for prompt engineering. We’re already seeing innovations like system messages, function calling, and advanced fine-tuning options that give developers even finer control over model behavior. Mastering these concepts now ensures you’re well-positioned to build powerful, intelligent, and user-friendly AI applications with Python—regardless of how large or complex your project becomes.

By incorporating the methods discussed in this guide and constantly experimenting with new approaches, you’ll be able to harness the full potential of large language models, transform your Python-based AI workflows, and remain at the cutting edge of prompt engineering innovation.