OpenAI has once again captured the spotlight in the world of Artificial Intelligence (AI), as CEO Sam Altman recently announced that the company has finalized a version of its new advanced reasoning AI model, dubbed “o3-mini.” This unveiling comes at a time when the AI industry is witnessing rapid innovations, fierce competition, and growing mainstream adoption. The move signals the next phase in OpenAI’s quest to develop more powerful and efficient large language models that can handle increasingly complex problem-solving tasks.

Ever since the launch of ChatGPT in late 2022, OpenAI has set new benchmarks in natural language processing (NLP) and generative AI. The global popularity of ChatGPT sparked an influx of investment into AI firms, resulting in new products, new research, and widespread adoption of AI-powered tools in nearly every sector, from education to healthcare to finance. Now, with the forthcoming release of the o3-mini model, OpenAI aims to build on its prior success with a model designed for complex reasoning tasks that still responds quickly enough for interactive use.

Importantly, Altman noted in a post on X (formerly Twitter) that the o3-mini launch will coincide with the release of the application programming interface (API) and a corresponding ChatGPT update. This integrated approach marks a deliberate response to user feedback and underscores OpenAI’s commitment to delivering holistic AI solutions. In the past, the company has periodically launched advanced models with separate release timelines for the API and the ChatGPT interface, but the feedback from developers and end-users alike led OpenAI to synchronize these launches for better ease of use and broader access.

In this article, we will explore the historical context of OpenAI’s language models and look at how these new “reasoning AI” models differ from their predecessors. We’ll discuss the significance of the o1 model released in September 2024, which was among the first models to focus on complex problem solving across domains like science, coding, and mathematics. We will then delve into the o3 and o3-mini models, their anticipated capabilities, and how they might shape the AI landscape in the coming months. Finally, we’ll touch on OpenAI’s broader strategy, including the recent beta feature “Tasks” in ChatGPT, the competitive environment with major players like Google and Apple, and the implications of large-scale funding for AI ventures.


A Brief History of OpenAI and ChatGPT

To appreciate the significance of o3-mini, it’s instructive to consider the evolution of OpenAI’s language models. Founded in 2015 with the mission to ensure that Artificial General Intelligence (AGI) benefits all of humanity, OpenAI has rapidly matured into one of the leading research organizations in the AI sector.

The Early Generations

- GPT (Generative Pre-trained Transformer): Released in 2018, the original GPT model set the foundation by showing that a transformer pre-trained on a large, unlabeled text corpus and then fine-tuned on specific tasks could perform well across many language benchmarks. Although it was innovative for its time, GPT had limitations in terms of context length, factual recall, and nuanced reasoning.

- GPT-2: Launched in 2019, GPT-2 was a substantial leap forward in terms of parameter count and language generation capabilities. It demonstrated the possibility of producing remarkably coherent text, raising questions about AI ethics, misinformation, and content moderation.

- GPT-3: Arriving in mid-2020, GPT-3 captured global headlines with its astonishing ability to generate human-like text. Boasting 175 billion parameters, GPT-3 fueled a surge of interest in generative AI and NLP applications like chatbots, content creation, and code generation.

The ChatGPT Revolution

- ChatGPT: In late 2022, OpenAI introduced ChatGPT to the public. This model was fine-tuned from the GPT-3.5 series, offering an interactive, conversational interface. Its popularity exploded, prompting millions of users to explore use cases from creative writing to debugging complex code.

- GPT-4: Released in March 2023, with far fewer technical details disclosed than its predecessors, GPT-4 brought improved reasoning, broader knowledge, and more robust guardrails for content moderation. GPT-4’s design paved the way for specialized, domain-specific AI applications in health, law, and finance.

- GPT-4o: Released in May 2024, GPT-4o (the “o” stands for “omni”) accepts text, audio, and image input in a single model and responds fast enough to support natural, near real-time voice conversations. This multimodality broadens its applications in areas such as education and personalized virtual assistance.

Laying the Groundwork for Advanced Reasoning

Each of these releases built on transformer architecture and massive datasets but fell short in certain reasoning-intensive tasks. The shift toward advanced reasoning models—like the upcoming o3 and o3-mini—underscores OpenAI’s strategic pivot: from focusing purely on language generation to deep, multi-step problem-solving.

In December 2024, OpenAI previewed its next reasoning models, o3 and o3-mini, as successors to o1. This direction builds on the success of the o1 line, which we’ll discuss further in the next sections.

The Rise of Advanced Reasoning Language Models

While generative ability has long been the hallmark of OpenAI’s language models, reasoning is an even more demanding aspect of AI development. Reasoning involves multi-step logic, the ability to analyze contradictory information, and a facility for solving complex, domain-specific problems. Historically, large language models have excelled in producing fluent text, but frequently faltered when faced with detailed logical puzzles, advanced mathematics, or tasks requiring sustained contextual processing.

Why Reasoning Models Are a Big Deal

- Higher Accuracy: Advanced reasoning models can theoretically achieve higher correctness in their responses, making them invaluable for critical applications such as medical diagnosis, legal document analysis, or financial forecasting.

- Complex Problem-Solving: They can break down multifaceted inquiries—whether in science, software development, or business intelligence—into smaller steps, analyzing each before generating a consolidated answer.

- Innovation in Human-AI Collaboration: More sophisticated reasoning paves the way for deeper human-AI collaboration. Researchers, students, and professionals can leverage these models to co-pilot through complex tasks, such as writing academic papers or designing intricate software systems.

- Beyond Surface-Level Understanding: Traditional chatbots might summarize texts or produce cohesive paragraphs, but advanced reasoning bots can interpret data, draw logical inferences, and even propose hypotheses, edging closer to true intelligence rather than mere textual mimicry.

o1, o3, and o3-mini: The New Breed

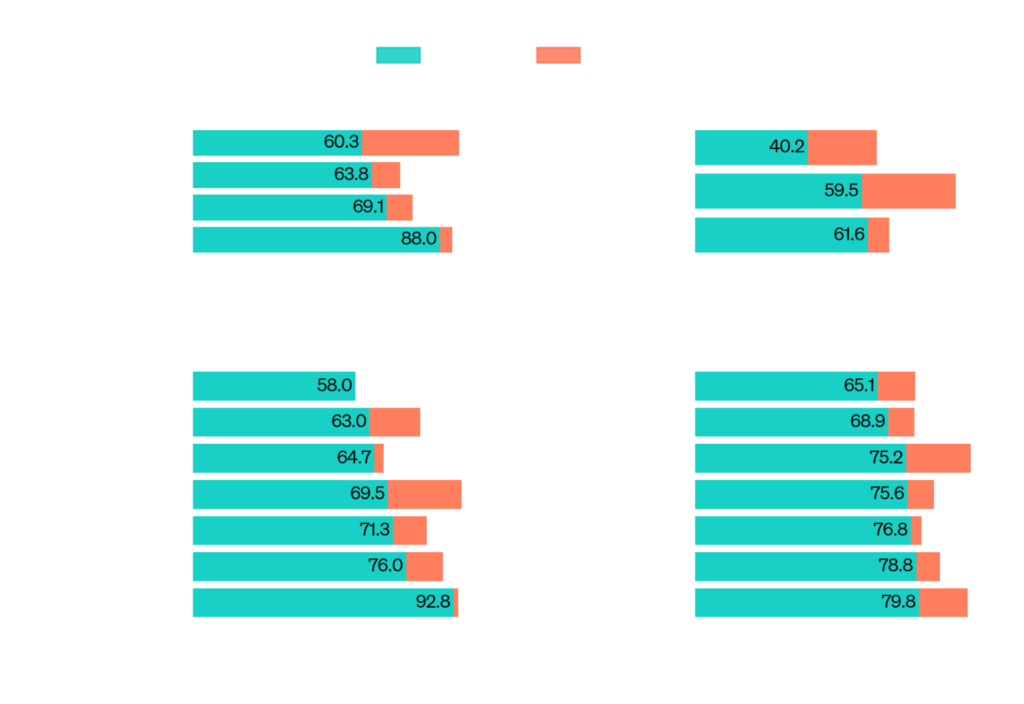

OpenAI’s unveiling of o1 in September 2024 marked a key milestone, positioning it as a model that could tackle harder problems. The success of o1 has set the stage for the upcoming o3 series. With o3-mini as a streamlined version, OpenAI hopes to broaden access to advanced reasoning models, making them cheaper and more widely deployed while still handling complex tasks at scale.

Overview of the o1 Model: A Leap Forward in Complex Problem-Solving

Before diving deeper into o3-mini, it’s worthwhile to look more closely at the o1 model. Launched in September 2024, the o1 models represented a strategic pivot for OpenAI, focusing on reasoning rather than purely generative tasks. In many ways, o1 laid the blueprint for the upcoming o3 series.

1. Core Capabilities of the o1 Model

- Extended Context Window

One of the hallmark features of o1 was a longer context window, enabling the model to handle extended conversations or texts without losing track of prior details. This improvement reduced the frequency of repetitive or contradictory answers, making o1 more reliable for complex dialogues.

- Multi-Step Logical Reasoning

The o1 model introduced a multi-step reasoning process. Rather than answering in a single pass, o1 generates an internal chain of thought (hidden “reasoning tokens”) before producing its final answer, which helps it handle multi-operation math problems, logical deductions, and scenario-based queries more adeptly.

- Domain Versatility

OpenAI showcased o1’s performance across several domains:

  - Science: The model could interpret experimental data, reason about cause and effect in scientific studies, and provide structured explanations of complex topics (e.g., quantum mechanics).

  - Coding: o1 handled advanced programming challenges and code reviews, often generating robust solutions that rivaled or even surpassed those of prior GPT models.

  - Mathematics: It efficiently tackled advanced algebra, calculus, and even proof-based problems, with a significantly reduced tendency to produce arithmetic or logical errors.

- Improved Error Handling

Building on user feedback, o1 introduced better error self-correction. If the model recognized potential contradictions in its own reasoning, it would often revise and refine its answers. Although still not perfect, this feature represented a step toward more autonomous, self-checking problem-solving.
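Because o1’s chain of thought is hidden, one practical way to see how much “thinking” a request consumed is the token accounting in the API response. OpenAI’s Chat Completions responses for reasoning models report these hidden tokens under `usage.completion_tokens_details.reasoning_tokens`, and they are billed as output tokens even though they are never shown. The minimal sketch below assumes that response shape, already parsed into a Python dict; the function name is our own, and the sample numbers are made up for illustration, not a real measurement.

```python
def split_completion_tokens(response: dict) -> dict:
    """Split a chat completion's output tokens into hidden reasoning vs. visible answer."""
    usage = response["usage"]
    total = usage["completion_tokens"]
    # Non-reasoning or older responses may omit the details object entirely.
    details = usage.get("completion_tokens_details") or {}
    reasoning = details.get("reasoning_tokens", 0)
    return {"reasoning": reasoning, "visible": total - reasoning, "total": total}


# Illustrative response fragment with made-up numbers, not a real measurement.
sample_response = {
    "usage": {
        "prompt_tokens": 40,
        "completion_tokens": 1200,
        "completion_tokens_details": {"reasoning_tokens": 1000},
    }
}

print(split_completion_tokens(sample_response))
# {'reasoning': 1000, 'visible': 200, 'total': 1200}
```

A large reasoning share is expected on hard prompts, which is why any output-token limit set on a reasoning model has to leave room for the hidden reasoning as well as the visible answer.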

2. Impact on Science, Coding, and Math

The o1 series opened new possibilities for researchers, developers, and students:

- Scientific Research: With advanced reasoning, o1 was able to produce hypotheses, design basic experimental methods, and even critique research questions. This helped accelerate literature reviews and conceptual frameworks in various scientific fields.

- Software Development: By parsing large codebases, explaining logic in existing scripts, and debugging with greater accuracy, o1 proved invaluable for developers faced with complex programming tasks. The ability to generate code that adheres to best practices saved significant time.

- Mathematical Prowess: Students and educators benefited from the model’s improved math capabilities, using it as a study partner for advanced problem sets or as a tool to verify calculations in engineering and financial simulations.

3. Examples Illustrating o1’s Advanced Reasoning

- Scientific Example

Question: “Explain how a mismatch repair mechanism might fail in a DNA replication scenario and suggest an experiment to detect the mismatch.”

o1’s Response (Simplified):

  - First, o1 provided an overview of mismatch repair mechanisms in cells, citing well-known mismatch repair proteins such as MSH2 and MLH1.

  - Next, it explained potential failure points, such as a mutation in the MLH1 gene.

  - Finally, it suggested a PCR-based experiment targeting the mutated regions and recommended analyzing the resulting amplicons via gel electrophoresis to confirm the presence of mismatches.

- Coding Example

Question: “Write a function to convert an array of integers into a single integer representation in base-36, ensuring the function handles large values.”

o1’s Response (Simplified):

  - Provided a well-structured function in Python, including error handling for incorrect inputs.

  - Explained the logic step by step, detailing how each array element was converted into base-36 and concatenated.

  - Offered potential optimizations for extremely large arrays, such as chunk processing.
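The coding prompt is open to interpretation, and o1’s actual output is not reproduced here. The sketch below is our own hypothetical reconstruction of one reading that matches the summary: each integer is converted to base 36 and the digit strings are concatenated into a single base-36 number. Python’s built-in integers have arbitrary precision, which covers the “large values” requirement; the names `to_base36` and `array_to_base36` are illustrative.

```python
DIGITS = "0123456789abcdefghijklmnopqrstuvwxyz"


def to_base36(n: int) -> str:
    """Convert a non-negative integer of any size to a base-36 string."""
    if n == 0:
        return "0"
    out = []
    while n:
        n, r = divmod(n, 36)  # peel off the least significant base-36 digit
        out.append(DIGITS[r])
    return "".join(reversed(out))


def array_to_base36(values) -> str:
    """Hypothetical reconstruction: convert each element to base 36 and concatenate."""
    if not isinstance(values, (list, tuple)) or not values:
        raise ValueError("expected a non-empty list of integers")
    parts = []
    for v in values:
        # bool is a subclass of int, so reject it explicitly.
        if not isinstance(v, int) or isinstance(v, bool):
            raise TypeError(f"not an integer: {v!r}")
        if v < 0:
            raise ValueError(f"negative values are not supported: {v}")
        parts.append(to_base36(v))
    return "".join(parts)


encoded = array_to_base36([35, 36])
print(encoded)           # prints z10
print(int(encoded, 36))  # prints 45396, the same value as a single integer
```

One design caveat: plain concatenation is not reversible, since `[1, 0]` and `[36]` both encode to `"10"`. Padding every element to a fixed number of base-36 digits would be one way to make the encoding decodable.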

- Mathematics Example

Question: “Prove that the sum of angles in a Euclidean triangle is 180 degrees, but show how this changes on a spherical surface.”

o1’s Response (Simplified):

  - Outlined a geometry proof of a triangle on a plane via parallel lines and angle chasing.

  - Showed that the sum of angles in a Euclidean triangle is exactly 180 degrees.

  - Compared this with spherical geometry, explaining how the sum exceeds 180 degrees on a curved surface and citing Girard’s theorem.
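The two results summarized in this example can be stated compactly. Below, α, β, γ are the interior angles at vertices A, B, C of a triangle, and Δ denotes the triangle’s area on a sphere of radius R. This is the standard textbook statement of the parallel-line argument and Girard’s theorem, not o1’s verbatim output.

```latex
% Euclidean plane: draw the line \ell through C parallel to AB.
% Alternate interior angles give \angle(\ell, CA) = \alpha and \angle(\ell, CB) = \beta,
% and together with \gamma these fill the straight angle at C:
\alpha + \beta + \gamma = \pi \quad (= 180^\circ)

% Sphere of radius R (Girard's theorem), with \Delta the triangle's area:
\alpha + \beta + \gamma = \pi + \frac{\Delta}{R^2}

% Example: the octant triangle with three right angles covers 1/8 of the sphere,
% so \Delta = \tfrac{4\pi R^2}{8} = \tfrac{\pi R^2}{2} and
\alpha + \beta + \gamma = \pi + \tfrac{\pi}{2} = \tfrac{3\pi}{2} \quad (= 270^\circ)
```

The “spherical excess” Δ/R² shrinks toward zero for small triangles, which is why the Euclidean result is recovered locally on a large sphere.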

These examples underscore the depth of reasoning o1 brought to the table. However, o1 was still not perfect. Complex multi-step logic could fail under certain conditions, and the model was occasionally tripped up by ambiguous or poorly specified queries. Nevertheless, o1 laid the groundwork for what we can expect from the upcoming o3 series.

Announcing o3 and o3-mini: What We Know So Far

thank you to the external safety researchers who tested o3-mini.

we have now finalized a version and are beginning the release process; planning to ship in ~a couple of weeks.

also, we heard the feedback: will launch api and chatgpt at the same time!

(it's very good.)

Sam Altman (@sama) on X, January 17, 2025

In December 2024, OpenAI previewed a new generation of reasoning models, o3 and o3-mini. In his January 17, 2025 post, Sam Altman confirmed that o3-mini is finalized and set to launch in “a couple of weeks,” with API and ChatGPT versions rolling out simultaneously.

1. The Roadmap from o1 to o3

The transition from o1 to o3 was long-anticipated. While o1 was a marked improvement over earlier GPT-based models in reasoning tasks, the o3 line is poised to introduce:

- Greater Parameter Efficiency: Rumors suggest that o3 models incorporate architectural optimizations, allowing them to train more effectively on specialized reasoning datasets without exponentially scaling parameter counts.

- Enhanced Context Handling: o3 might feature an even longer context window than o1, enabling it to excel at tasks involving multiple, extensive text inputs or large data sets.

- Multi-Modal Reasoning (Potential): There is industry speculation that o3 could integrate not just textual data, but also images, tables, or other forms of input, enabling more advanced reasoning across heterogeneous data formats.

2. Why “Mini” Matters: o3-mini’s Potential Use Cases

A key question arises: Why release an o3-mini? Historically, “mini” models serve several strategic functions in the AI ecosystem:

- Cost-Effective Deployment: Smaller models are often less resource-intensive, allowing businesses with tighter budgets to deploy advanced AI solutions without breaking the bank.

- Faster Inference: For applications where speed is crucial—like real-time chatbots, embedded systems, or edge devices—a smaller model can deliver near-instantaneous responses.

- Customized Solutions: o3-mini could be fine-tuned for niche or industry-specific tasks. Its more compact architecture may allow for more efficient domain-specific training.

- Scalability: Organizations can adopt o3-mini as a stepping stone, testing advanced reasoning features before potentially upgrading to full-scale o3 solutions.

By simultaneously launching the API for o3-mini, OpenAI is likely aiming to accelerate developer adoption, fostering an ecosystem of third-party tools that leverage advanced reasoning in consumer and enterprise applications.
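For developers, a same-day API launch means the new model can be called from code as soon as it ships. The sketch below builds a Chat Completions request with only Python’s standard library. It assumes the model will be exposed under the identifier `"o3-mini"` and will accept the `reasoning_effort` (`"low"`, `"medium"`, `"high"`) and `max_completion_tokens` parameters that OpenAI’s API already documents for the o1 series; the function name and defaults are our own, so check the official API reference once o3-mini is live.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_reasoning_request(prompt: str, api_key: str,
                            model: str = "o3-mini",       # assumed model identifier
                            effort: str = "medium",       # assumed to mirror o1's reasoning_effort
                            max_completion_tokens: int = 4000) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for a reasoning model."""
    if effort not in ("low", "medium", "high"):
        raise ValueError(f"unsupported reasoning effort: {effort}")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "reasoning_effort": effort,
        # Reasoning models count hidden reasoning tokens against this budget too.
        "max_completion_tokens": max_completion_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")
    if key:  # only make a network call when a key is configured
        req = build_reasoning_request("How many primes are there below 100?", key)
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)
        print(reply["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes the payload easy to inspect or unit-test, and swapping the `model` argument is all it would take to compare o3-mini against o1 on the same prompt.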

3. Anticipated Improvements and Innovations

While precise details about o3 and o3-mini remain under wraps, the following capabilities are highly anticipated:

- Automatic Chain-of-Thought Reasoning: o1 already reasons through a hidden chain of thought without special prompting; o3-mini is expected to bring this behavior to a smaller, cheaper model, improving performance on multi-step problems at lower cost.

- Enhanced Self-Correction and Reliability: Building on o1’s self-correction mechanism, o3-mini might show further refined logic checks and error handling, providing more consistent answers.

- Context Preservation in Extended Dialogues: o3-mini may hold context more reliably across long and branching conversations, which is invaluable for content creators, educators, and customer service systems.

- Cross-Lingual Reasoning: The new model may further expand cross-lingual capabilities, improving reasoning quality for queries in multiple languages, which is crucial for global organizations.

Though these are speculative, OpenAI’s track record suggests that each iteration of its language models is significantly more advanced than the last. o3-mini is expected to continue that trend and will likely be surpassed only by the more capable o3 mainline model, which is slated for a later release.

Competition in the Advanced Reasoning Arena

1. The Alphabet (Google) Rivalry

OpenAI’s ambitions inevitably collide with Alphabet (Google), a heavyweight in AI research. Google’s labs, including Google DeepMind, have produced advanced models such as PaLM, AlphaCode, and the Gemini family, with specialized capabilities in coding assistance and logic-based tasks. With the rise of large language models, Google has also integrated AI features into its search engine and productivity suite, intensifying the race to deliver the most capable AI assistant.

2. Microsoft’s Backing and Broader AI Ecosystem

Microsoft has invested heavily in OpenAI and integrated GPT-based technology into its products, from the Azure cloud platform to GitHub Copilot and Bing Chat (since rebranded as Microsoft Copilot). This partnership gives OpenAI access to massive cloud infrastructure, supporting rapid experimentation and large-scale deployment of new models like o3-mini. Microsoft stands to benefit by building advanced reasoning capabilities into its enterprise solutions, from Microsoft 365 (formerly Office 365) to Dynamics 365, reaching a vast commercial user base.

3. Other Players: Siri, Alexa, and Emerging Virtual Assistants

OpenAI’s foray into advanced reasoning also places it head-to-head with Apple’s Siri and Amazon’s Alexa, which are popular voice-based AI assistants. Though these systems have historically focused on simpler voice commands, the boundary between casual voice queries and deep, multi-step problem-solving is quickly blurring. As ChatGPT integrates with real-world tasks via features like Tasks (discussed later), Siri and Alexa could face an uphill battle if they fail to incorporate advanced reasoning to keep pace with user expectations.

Emerging players in the AI assistant space—including startup solutions and open-source efforts—add further diversity to the competitive field. However, OpenAI’s brand recognition, combined with robust institutional backing, positions it strongly to maintain a leadership role in advanced AI solutions.

User Feedback and Release Strategy

The decision to launch the o3-mini API and ChatGPT interface simultaneously addresses a critical piece of user feedback: the frustration developers have faced when new models were released for ChatGPT but not for API integration, or vice versa. This synchronization fosters a more cohesive ecosystem, allowing:

- Developers to immediately incorporate the new capabilities into their applications.

- End-users to explore the model’s strengths and limitations within the ChatGPT interface.

- Educational institutions to set up trials, bridging the gap between user-facing chat environments and custom-coded solutions.

1. Launching APIs and ChatGPT Simultaneously

Previously, staggered releases meant that any breakthroughs discovered through ChatGPT usage might not be immediately implementable by developers in real-world applications. In some cases, API updates lagged significantly behind the ChatGPT interface. By merging these release timelines, OpenAI ensures that breakthroughs in advanced reasoning can be harnessed immediately across multiple platforms, speeding up innovation and adoption.

2. Importance of Community and Developer Input

OpenAI actively solicits feedback from a worldwide community of researchers, developers, and enterprise users. Frequent user complaints or suggestions—whether for improved reliability, advanced features, or better debugging tools—inform how OpenAI refines each new model. o3-mini specifically benefited from:

- Beta Testing with Researchers: A select group of external safety researchers tested o3-mini extensively, identifying potential pitfalls in edge cases, bias, or reliability.

- Developer Previews: Some high-profile developers received early glimpses of the model’s capabilities, shaping refinements in the API design and usage guidelines.

- Iterative Updates: User-driven feedback loops ensured that the final release version addressed critical issues identified in earlier builds, leading to a more polished end product.

This collaborative approach ultimately empowers OpenAI to create robust models that meet both academic and commercial needs.

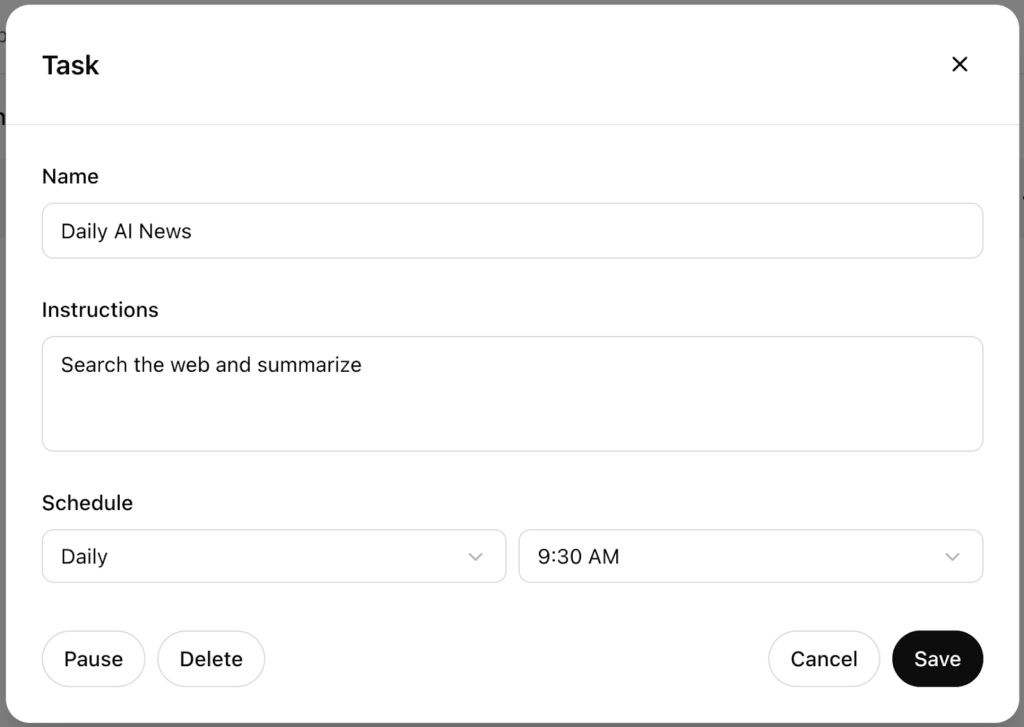

OpenAI’s Recent Beta Feature: Tasks in ChatGPT

Just before announcing o3-mini, OpenAI also revealed a new beta feature called “Tasks” in ChatGPT. This feature signals the company’s push to make ChatGPT a more versatile virtual assistant, one that can act on the user’s behalf at a later time rather than only respond in the moment.

1. What Is “Tasks” and How It Works

The “Tasks” feature lets users schedule actions for ChatGPT to carry out later, either once or on a recurring basis. For instance, a user planning a vacation could ask ChatGPT to:

- Send a reminder to book flights on a chosen date

- Remind them, months ahead, to check that their passport is still valid

- Deliver a weekly digest of activity ideas for the destination

- Provide a daily weather summary for the destination in the week before departure

ChatGPT runs each task at its scheduled time and delivers the result as a notification. This moves ChatGPT beyond a purely reactive Q&A system toward an assistant that can follow up on its own schedule.

2. Potential Synergy with o3 and o3-mini

When combined with advanced reasoning, such as what o3-mini promises, the “Tasks” feature can become even more powerful:

- Complex Project Management: Teams could use ChatGPT and the o3-mini backend to plan multi-phase projects, relying on advanced reasoning to resolve bottlenecks or forecast potential risks.

- Research and Writing: Writers and researchers can break down a complex topic into sequential tasks—like literature review, data gathering, data analysis, and writing—letting the model track each phase meticulously.

- Real-Time Collaboration: If integrated with external services (for example, calendar apps or project management tools), an advanced ChatGPT with “Tasks” could automate or semi-automate scheduling, file organization, or resource allocation.

3. Competing with Siri and Alexa

Tasks positions ChatGPT to be more than a text-based interface; it’s effectively an intelligent workspace. Apple’s Siri and Amazon’s Alexa have dabbled in multi-step tasks, but usually with a narrower scope (e.g., voice commands for turning off lights or setting alarms). By focusing on advanced reasoning and broader integration, OpenAI could disrupt how people interact with digital assistants, offering a more nuanced, text-driven, and problem-solving-centric approach.

Investments, Funding, and the Growing AI Frenzy

The success of ChatGPT and subsequent models has fueled a frenzy of investments into AI startups and established companies alike. With each new breakthrough in NLP and reasoning, capital pours in to fund further research, product development, and commercialization.

1. $6.6 Billion Funding Round and Industry Implications

OpenAI’s reported $6.6 billion funding round in October 2024 signaled investor confidence that advanced AI models will be transformative across multiple sectors. This injection of capital:

- Accelerates Research: More funds mean bigger and more specialized data sets, improved computing resources, and faster model training cycles.

- Expands Commercial Partnerships: Organizations from healthcare to finance are eager to trial advanced AI for domain-specific tasks like diagnostic aids or automated auditing.

- Raises the Competitive Bar: Smaller AI ventures must either find niche markets or partner with larger entities to survive, as the research arms race becomes costly.

2. How Advanced Models Attract New Users and Capital

Each release of a more powerful model, be it o1, o3-mini, or the soon-to-come o3, tends to pull in both new users and new capital. The underlying logic is straightforward: advanced AI opens up new business opportunities. Whether it’s automating customer support, analyzing vast datasets, or acting as a personal knowledge assistant, more capable models promise a higher return on investment for those who adopt them.

3. The Role of Partnerships in Driving Innovation

Beyond Microsoft, OpenAI has forged partnerships with various research organizations, academic institutions, and enterprise companies. Each partner brings:

- Domain Expertise: Helping shape specialized training datasets and unique use cases (e.g., medical imaging data).

- Regulatory Insight: Guiding model deployment in sensitive sectors that require compliance with HIPAA, GDPR, or other privacy standards.

- User-Base Expansion: Integrating the AI models into existing enterprise platforms, thus rapidly scaling adoption.

This symbiotic relationship between industry leaders, startups, and research institutions propels AI innovation at a startling pace.

Looking Ahead: What the Future Holds for o3-mini

With o3-mini finalized and expected to launch in a matter of weeks, the global AI community is buzzing with speculation about its features, performance benchmarks, and potential use cases. The transition from o1 to o3 is viewed by many as a quantum leap in reasoning capabilities.

1. Potential Capabilities and Expectations

- Deeper Chain-of-Thought: We can anticipate stronger chain-of-thought reasoning, letting o3-mini tackle more layered logic puzzles or real-world tasks.

- Natural Language Debugging: A specialized improvement might be advanced debugging tools where o3-mini can auto-identify logical flaws in code snippets or text arguments.

- Adaptive Learning: Some speculate that o3-mini could include on-the-fly adaptation, meaning it may refine its logic after repeated interactions, effectively “learning” from user corrections in real-time.

- Context Switching: Another potential improvement is quicker context switching, enabling the model to jump between disparate subjects in a single conversation with minimal confusion.

2. Ethical and Safety Considerations

With increased complexity and reasoning power come heightened risks:

- Hallucinations: Even advanced models can generate plausible but false statements, potentially misleading users in critical areas like healthcare or law.

- Bias: Larger or more specialized training sets can reduce certain biases but may introduce new ones. Safety researchers must ensure that o3-mini is fair and representative across diverse user populations.

- Misinformation: Enhanced rhetorical skills could make AI-generated misinformation more convincing. OpenAI has consistently taken steps to mitigate these risks, but oversight remains essential.

OpenAI’s existing safety frameworks, combined with external testing by domain experts, aim to keep o3-mini and its full sibling model responsibly aligned with user and societal needs.

3. Timeline and Next Steps

- Initial Release (~Couple of Weeks): OpenAI will roll out the o3-mini model to ChatGPT and through an official API, providing documentation, usage guidelines, and best practices.

- Community Feedback Phase: Early adopters, developers, and educators will stress-test o3-mini, identifying performance issues, user experience hiccups, and potential security concerns.

- Iterative Updates: OpenAI will release patches or updated versions as it integrates feedback, potentially unveiling new features like advanced chain-of-thought or domain-specific modules.

- Full o3 Launch: In the months following o3-mini’s debut, the full o3 model, likely with more parameters and broader capabilities, will hit the market.

For those closely following OpenAI’s announcements, the next few months promise to be eventful. The combination of user feedback, developer interest, and sustained funding makes rapid iteration on these advanced reasoning models likely.

Conclusion and Stay Tuned for Updates

OpenAI’s announcement of o3-mini marks another milestone in the evolution of advanced reasoning language models. Building on the foundation laid by earlier models like o1, o3-mini promises to deliver a more efficient, faster, and potentially more versatile solution for complex problem-solving across science, coding, mathematics, and beyond. By releasing the API and ChatGPT interface simultaneously, OpenAI addresses a critical user demand for immediate, seamless integration into diverse applications.

This launch occurs against the backdrop of intense competition, with tech giants like Alphabet (Google) and Apple all vying for leadership in AI. While Siri and Alexa pioneered voice-based interactions, ChatGPT’s new “Tasks” feature and upcoming advanced models like o3-mini are redefining what a virtual assistant can do—pushing the boundaries of multi-step reasoning and project management. Furthermore, the broader environment of large-scale funding—exemplified by OpenAI’s massive $6.6 billion injection—ensures that the pace of AI development remains rapid and relentless.

As we look ahead, the potential for o3-mini and the future o3 model to reshape industries and daily life is immense. From academic research to enterprise-level solutions, advanced reasoning models promise to streamline workflows, accelerate innovation, and even spark new use cases we have yet to imagine. At the same time, these developments underscore the importance of ethical guidelines and responsible AI deployment—a realm in which OpenAI continues to make measured efforts.

We’ll provide full reviews, detailed explorations, and use-case analyses once o3-mini is officially released and tested in the wild. Expect performance benchmarks, real-world developer feedback, and a deeper look into how o3-mini’s new features work in practice. Stay tuned for those comprehensive updates in the coming weeks, as we witness yet another leap forward in the AI landscape.

Final Thoughts

- o1 Recap: We saw a major step up in solving multi-step queries in domains like science, coding, and math.

- o3 and o3-mini: The next frontier, with rumored improvements in reasoning, self-correction, and context management.

- Competition and Funding: Continued rivalry with Google, Apple, and an entire ecosystem of AI startups, fueled by colossal investment rounds.

- Implications: More efficient tasks, advanced project management, deeper chain-of-thought, and expanded real-world applications.

The o3-mini era of advanced AI reasoning is just around the corner. Whether you’re a developer eager to integrate new capabilities into your tech stack or an end-user curious about the latest breakthroughs, o3-mini promises to be a game-changer. Keep an eye on OpenAI’s official channels—and this space—for detailed reviews and real-world examples once the model officially goes live.

Stay tuned. This is only the beginning of what advanced reasoning AI can achieve. We will update you with a full review and deep dive as soon as o3-mini is publicly available, ensuring you have the insights you need to make the most of this exciting new model.